Build Your Own Serverless: part 4

In part 3 of the Build Your Own Serverless series, we added SQLite persistence with Gorm, introduced a new hostname strategy, replaced exec.Command with the Docker Go client, and added graceful shutdown to clean up resources.

In this post we will be covering advanced topics:

Support of versioning

Version Promotion (Canary, A/B & more)

Environment variables

Garbage collection of idle containers

Follow along to learn these advanced topics and take our serverless platform to the next level!


Versioning & Environment Variables

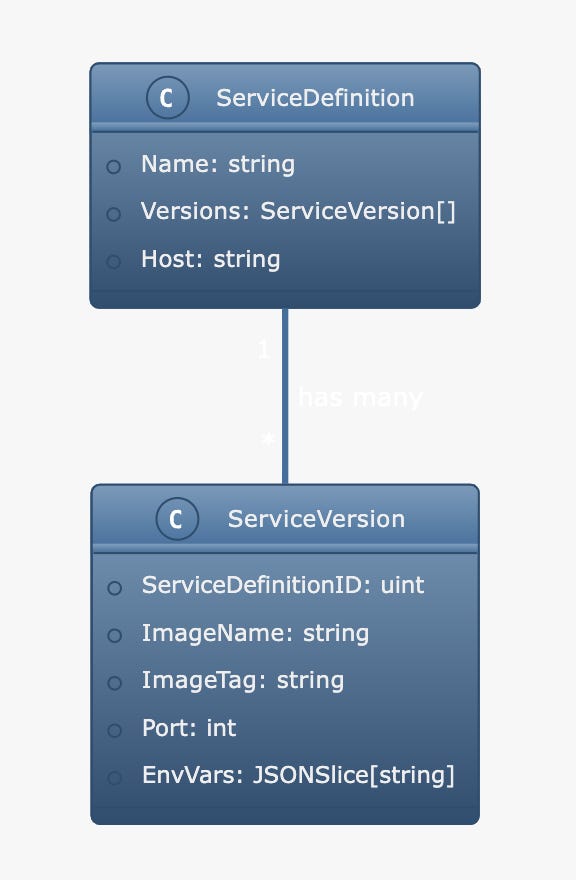

Versioning is a powerful capability that will enable us to easily create and track new versions of our Cless containers. Each version will have a clear relationship to our overall service definition, giving us full visibility into what container versions are currently running as well as a history of how our service has evolved over time.

Data Structure Definition:

Previously we put the serverless resource into our ServiceDefinition object, which contained all the necessary attributes to start a container and keep track of it.

    type ServiceDefinition struct {
        gorm.Model
        Name      string `json:"name" gorm:"unique"`
        ImageName string `json:"image_name"`
        ImageTag  string `json:"image_tag"`
        Port      int    `json:"port"`
        Host      string `json:"host"`
    }

Instead, we will extract the container attributes into a new struct named ServiceVersion and add an EnvVars property of type datatypes.JSONSlice[string], which stores the container's environment variables as strings such as ENV=prod:

    type ServiceVersion struct {
        gorm.Model
        ServiceDefinitionID uint                        `json:"service_definition_id" gorm:"index,references:ID"`
        ImageName           string                      `json:"image_name"`
        ImageTag            string                      `json:"image_tag"`
        Port                int                         `json:"port"`
        EnvVars             datatypes.JSONSlice[string] `json:"env_vars"`
    }

Now we need to establish a has-many relationship between ServiceDefinition and ServiceVersion:

    type ServiceDefinition struct {
        gorm.Model
        Name     string           `json:"name" gorm:"unique"`
        Versions []ServiceVersion `json:"versions" gorm:"foreignKey:ServiceDefinitionID"`
        Host     string           `json:"host"`
    }

Methods & Persistence:

Now that the types are defined, we need to refactor the isValid method on ServiceDefinition and add an equivalent method to ServiceVersion:

    func (sDef *ServiceDefinition) isValid() bool {
        return sDef.Name != "" && sDef.Name != "admin"
    }

    func (sVer *ServiceVersion) isValid() bool {
        return sVer.ImageName != "" && sVer.ImageTag != "" && sVer.Port > 0
    }

Let's extend our repository definition to support persistence of new versions:

    type ServiceDefinitionRepository interface {
        GetAll() ([]ServiceDefinition, error)
        GetByName(name string) (*ServiceDefinition, error)
        GetByHostName(hostName string) (*ServiceDefinition, error)
        Create(service ServiceDefinition) error
        AddVersion(service *ServiceDefinition, version *ServiceVersion) error
    }

In SqliteServiceDefinitionRepository we implement the new AddVersion function, using Gorm associations to make managing the relationship easier:

    // AddVersion creates a new version and adds it to the service.
    func (r *SqliteServiceDefinitionRepository) AddVersion(service *ServiceDefinition, version *ServiceVersion) error {
        return r.db.Model(service).Association("Versions").Append(version)
    }

Server API

We need a REST API for managing ServiceVersion, similar to the one we built for ServiceDefinition. We also need a function on ServiceDefinitionManager, because we don't want to call the repository directly from our HTTP server routes:

    // AddVersion adds a new version to a service definition.
    func (m *ServiceDefinitionManager) AddVersion(
        service *ServiceDefinition,
        version *ServiceVersion,
    ) error {
        m.mutex.Lock()
        defer m.mutex.Unlock()

        if !version.isValid() {
            return errors.New("invalid service version")
        }
        return m.repo.AddVersion(service, version)
    }

Within StartAdminServer we add the ServiceVersion routes:

    // add new version for a service definition
    e.POST("/serviceDefinitions/:name/versions", func(c echo.Context) error {
        name := c.Param("name")

        // check the lookup result before using service, so it is never nil below
        service, err := manager.GetServiceDefinitionByName(name)
        if err == ErrServiceNotFound {
            return c.String(http.StatusNotFound, err.Error())
        }
        if err != nil {
            return c.String(http.StatusInternalServerError, err.Error())
        }

        // bind version
        version := new(ServiceVersion)
        if err := c.Bind(version); err != nil {
            return c.String(http.StatusBadRequest, err.Error())
        }

        if err := manager.AddVersion(service, version); err != nil {
            return c.String(http.StatusBadRequest, err.Error())
        }
        return c.String(http.StatusCreated, "Version added")
    })

    // list versions for a service definition
    e.GET("/serviceDefinitions/:name/versions", func(c echo.Context) error {
        name := c.Param("name")

        service, err := manager.GetServiceDefinitionByName(name)
        if err == ErrServiceNotFound {
            return c.String(http.StatusNotFound, err.Error())
        }
        if err != nil {
            return c.String(http.StatusInternalServerError, err.Error())
        }
        return c.JSON(http.StatusOK, service.Versions)
    })

As you can see, with minimal refactoring we were able to incorporate versions into cLess. That is only about a third of the story, though: we still need a way to promote new versions so they get served, which means the proxy needs a way to know which version to serve.
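The request flow of the POST route (look up the service, bind the body, validate, persist) can be sketched without Echo or Gorm. The following is a minimal, standard-library-only illustration: the `store` type, the `post` helper and the lowercase `serviceVersion` struct are hypothetical stand-ins for the manager, repository and ServiceVersion, and the map is not protected against concurrent access.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// serviceVersion mirrors the JSON shape of ServiceVersion, without gorm fields.
type serviceVersion struct {
	ImageName string   `json:"image_name"`
	ImageTag  string   `json:"image_tag"`
	Port      int      `json:"port"`
	EnvVars   []string `json:"env_vars"`
}

func (v *serviceVersion) isValid() bool {
	return v.ImageName != "" && v.ImageTag != "" && v.Port > 0
}

// store is a hypothetical in-memory replacement for the manager and repository.
type store struct {
	versions map[string][]serviceVersion // keyed by service name
}

// addVersion handles POST /serviceDefinitions/<name>/versions.
func (s *store) addVersion(w http.ResponseWriter, r *http.Request) {
	name := strings.TrimSuffix(strings.TrimPrefix(r.URL.Path, "/serviceDefinitions/"), "/versions")
	// 1. unknown service: 404, before touching the body
	if _, ok := s.versions[name]; !ok {
		http.Error(w, "service not found", http.StatusNotFound)
		return
	}
	// 2. malformed body: 400
	var v serviceVersion
	if err := json.NewDecoder(r.Body).Decode(&v); err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	// 3. well-formed but invalid version: 400
	if !v.isValid() {
		http.Error(w, "invalid service version", http.StatusBadRequest)
		return
	}
	// 4. persist and report 201
	s.versions[name] = append(s.versions[name], v)
	w.WriteHeader(http.StatusCreated)
	fmt.Fprint(w, "Version added")
}

// post sends a JSON body to the handler and returns the response status code.
func post(s *store, path, body string) int {
	req := httptest.NewRequest(http.MethodPost, path, strings.NewReader(body))
	rec := httptest.NewRecorder()
	s.addVersion(rec, req)
	return rec.Code
}

func main() {
	s := &store{versions: map[string][]serviceVersion{"my-python-app": {}}}
	fmt.Println(post(s, "/serviceDefinitions/my-python-app/versions",
		`{"image_name":"python-docker","image_tag":"latest","port":8080}`))
	fmt.Println(post(s, "/serviceDefinitions/unknown/versions", `{}`))
}
```

Checking the lookup result first means the handler never reaches the persistence step with a missing service, whatever the body contains.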

Version Promotion (Canary, A/B & more):

Our version promotion goes beyond pinning a single version ID to a ServiceDefinition. We can split traffic between multiple versions using weights, which enables deployment patterns such as canary releases. Rather than routing 100% of traffic to one version, we can send 1% to a new version for incremental testing, split 50/50 to compare A and B, or use any other ratio, as long as the weights add up to 100.

Traffic Weights:

Let's create a struct TrafficWeight that contains the traffic split definition and reference it from ServiceDefinition:

    type Weight struct {
        ServiceVersionID uint `json:"service_version_id"`
        Weight           uint `json:"weight"`
    }

    type TrafficWeight struct {
        gorm.Model
        ServiceDefinitionID uint                        `json:"service_definition_id" gorm:"index,references:ID"`
        Weights             datatypes.JSONSlice[Weight] `json:"weights"`
    }

    type ServiceDefinition struct {
        gorm.Model
        Name           string           `json:"name" gorm:"unique"`
        Versions       []ServiceVersion `json:"versions" gorm:"foreignKey:ServiceDefinitionID"`
        TrafficWeights []TrafficWeight  `json:"traffic_weights" gorm:"foreignKey:ServiceDefinitionID"`
        Host           string           `json:"host"`
    }

We use a has-many relationship between ServiceDefinition and TrafficWeight, so every traffic split we create is kept. This history gives us an audit trail of how traffic shifted as new versions rolled out. If a particular split causes issues, we can quickly revert to a known-good distribution, for example by adding it again as the newest entry, and we can recreate any earlier splitting strategy the same way. That makes it much safer to experiment with different version promotion patterns.
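To make the history idea concrete, here is a minimal in-memory sketch. It assumes the newest entry is the one in effect and that a rollback is done by appending a copy of an earlier split; the `history` type and its `add`, `latest` and `rollbackTo` methods are hypothetical and only stand in for the database table.

```go
package main

import "fmt"

// Weight and TrafficWeight mirror the structs above, minus the gorm fields.
type Weight struct {
	ServiceVersionID uint
	Weight           uint
}

type TrafficWeight struct {
	ID      uint
	Weights []Weight
}

// history is a hypothetical in-memory stand-in for the traffic weights table.
type history struct {
	entries []TrafficWeight
}

// add appends a new split; earlier entries are never modified.
func (h *history) add(weights []Weight) TrafficWeight {
	tw := TrafficWeight{ID: uint(len(h.entries) + 1), Weights: weights}
	h.entries = append(h.entries, tw)
	return tw
}

// latest returns the split that is currently in effect.
func (h *history) latest() (TrafficWeight, bool) {
	if len(h.entries) == 0 {
		return TrafficWeight{}, false
	}
	return h.entries[len(h.entries)-1], true
}

// rollbackTo re-appends a copy of an earlier split so it becomes the latest.
func (h *history) rollbackTo(id uint) (TrafficWeight, error) {
	for _, tw := range h.entries {
		if tw.ID == id {
			copied := append([]Weight(nil), tw.Weights...)
			return h.add(copied), nil
		}
	}
	return TrafficWeight{}, fmt.Errorf("traffic weight %d not found", id)
}

func main() {
	h := &history{}
	h.add([]Weight{{1, 100}})         // everything on version 1
	h.add([]Weight{{1, 90}, {2, 10}}) // canary: 10% on version 2
	if _, err := h.rollbackTo(1); err != nil {
		panic(err)
	}
	cur, _ := h.latest()
	fmt.Println(len(h.entries), cur.Weights)
}
```

Because a rollback is just another entry, the audit trail also records that the rollback happened.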

Method for validating TrafficWeight:

    func (tw *TrafficWeight) isValid() bool {
        // sum of weights should be 100
        sum := 0
        for _, w := range tw.Weights {
            sum += int(w.Weight)
        }
        return sum == 100
    }

Let's add persistence as well, similar to what we did with ServiceVersion:

    type ServiceDefinitionRepository interface {
        GetAll() ([]ServiceDefinition, error)
        GetByName(name string) (*ServiceDefinition, error)
        GetByHostName(hostName string) (*ServiceDefinition, error)
        Create(service ServiceDefinition) error
        AddVersion(service *ServiceDefinition, version *ServiceVersion) error
        AddTrafficWeight(service *ServiceDefinition, weight *TrafficWeight) error
    }

    // AddTrafficWeight creates a new traffic weight and adds it to the service.
    func (r *SqliteServiceDefinitionRepository) AddTrafficWeight(service *ServiceDefinition, weight *TrafficWeight) error {
        return r.db.Model(service).Association("TrafficWeights").Append(weight)
    }

To manage these traffic weights we need a REST API and an AddTrafficWeight method on our ServiceDefinitionManager:

    // AddTrafficWeight adds a new traffic weight to a service definition.
    func (m *ServiceDefinitionManager) AddTrafficWeight(
        service *ServiceDefinition,
        weight *TrafficWeight,
    ) error {
        m.mutex.Lock()
        defer m.mutex.Unlock()

        if !weight.isValid() {
            return errors.New("invalid traffic weight")
        }
        return m.repo.AddTrafficWeight(service, weight)
    }

    // list traffic weights for a service definition
    e.GET("/serviceDefinitions/:name/trafficWeights", func(c echo.Context) error {
        name := c.Param("name")

        service, err := manager.GetServiceDefinitionByName(name)
        if err == ErrServiceNotFound {
            return c.String(http.StatusNotFound, err.Error())
        }
        if err != nil {
            return c.String(http.StatusInternalServerError, err.Error())
        }
        return c.JSON(http.StatusOK, service.TrafficWeights)
    })

    // add new traffic weight for a service definition
    e.POST("/serviceDefinitions/:name/trafficWeights", func(c echo.Context) error {
        name := c.Param("name")

        // check the lookup result before using service, so it is never nil below
        service, err := manager.GetServiceDefinitionByName(name)
        if err == ErrServiceNotFound {
            return c.String(http.StatusNotFound, err.Error())
        }
        if err != nil {
            return c.String(http.StatusInternalServerError, err.Error())
        }

        // bind traffic weight
        weight := new(TrafficWeight)
        if err := c.Bind(weight); err != nil {
            return c.String(http.StatusBadRequest, err.Error())
        }

        if err := manager.AddTrafficWeight(service, weight); err != nil {
            return c.String(http.StatusBadRequest, err.Error())
        }
        return c.String(http.StatusCreated, "Traffic weight added")
    })

Now that we can store and manage traffic weights, let's use them. We will implement a method called ChooseVersion, which picks a version from the latest TrafficWeight of a ServiceDefinition.

    // ChooseVersion randomly chooses a version based on the weights.
    // Weights have the form of a slice of {version, weight} pairs;
    // the sum of weights is always 100.
    // example: [{1, 50}, {2, 50}] means 50% of the traffic goes to version 1 and 50% to version 2
    // example: [{1, 10}, {2, 20}, {3, 70}] means 10% of the traffic goes to version 1, 20% to version 2 and 70% to version 3
    // example: [{1, 100}] means 100% of the traffic goes to version 1
    // example: [{1, 50}, {2, 50}, {3, 50}] is invalid because the sum of weights is 150
    // The method needs to be concurrency safe.
    var randLock = &sync.Mutex{}

    func (sDef *ServiceDefinition) ChooseVersion() uint {
        // no traffic weights configured yet: nothing to choose from
        if len(sDef.TrafficWeights) == 0 {
            return 0
        }

        randLock.Lock()
        // uniform in [1, 100], so a weight of N covers exactly N of the 100 values
        // (rand.Intn(101) would yield 101 values and slightly favor the first version)
        r := rand.Intn(100) + 1
        randLock.Unlock()

        // grab latest of traffic weights
        tw := sDef.TrafficWeights[len(sDef.TrafficWeights)-1]
        for _, w := range tw.Weights {
            r -= int(w.Weight)
            if r <= 0 {
                return w.ServiceVersionID
            }
        }
        return 0
    }

We will see later how to use this method in main.go.
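The weighted selection can also be written so that the random draw is separated from the mapping onto weights, which makes the mapping easy to check by hand. This is a standalone sketch, not the code from the repo: `pickVersion`, `chooseVersion` and `errNoWeights` are hypothetical names, and it reports an error instead of returning 0 when nothing matches. The top-level math/rand functions are documented as safe for concurrent use, so the sketch takes no extra lock.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
)

// Weight mirrors the struct above, minus the JSON tags.
type Weight struct {
	ServiceVersionID uint
	Weight           uint
}

var errNoWeights = errors.New("no traffic weights configured")

// pickVersion maps a roll in [0, 100) onto the cumulative weight ranges:
// with weights 10/30/60, rolls 0-9 hit the first version, 10-39 the second,
// and 40-99 the third.
func pickVersion(weights []Weight, roll int) (uint, error) {
	if len(weights) == 0 {
		return 0, errNoWeights
	}
	cumulative := 0
	for _, w := range weights {
		cumulative += int(w.Weight)
		if roll < cumulative {
			return w.ServiceVersionID, nil
		}
	}
	return 0, fmt.Errorf("roll %d not covered: weights sum to %d", roll, cumulative)
}

// chooseVersion draws the roll and delegates to pickVersion.
func chooseVersion(weights []Weight) (uint, error) {
	return pickVersion(weights, rand.Intn(100))
}

func main() {
	weights := []Weight{{6, 10}, {7, 30}, {8, 60}}
	counts := map[uint]int{}
	for i := 0; i < 100000; i++ {
		id, err := chooseVersion(weights)
		if err != nil {
			panic(err)
		}
		counts[id]++
	}
	// Counts should land near 10%, 30% and 60% of the 100000 draws.
	fmt.Println(counts)
}
```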

Container Manager:

With all these changes, minimal refactoring will also be needed in the DockerContainerManager. Currently it relies on ServiceDefinition, so instead we will create a struct called ExternalServiceDefinition, which will help integrate with other modules and keep the admin module loosely coupled with the container module.

    type ExternalServiceDefinition struct {
        Sdef    *ServiceDefinition
        Version *ServiceVersion
    }

    // GetKey creates a unique key of the form <service_id>:<version_id>.
    func (sDef *ExternalServiceDefinition) GetKey() string {
        return fmt.Sprintf("%d:%d", sDef.Sdef.ID, sDef.Version.ID)
    }

Now we just need to replace ServiceDefinition with ExternalServiceDefinition in DockerContainerManager. The change isn't hard; here is GetRunningServiceForHost as an example:

    func (cm *DockerContainerManager) GetRunningServiceForHost(host string, version uint) (*string, error) {
        log.Debug().Str("host", host).Msg("getting container")

        sExternalDef, err := cm.sDefManager.GetExternalServiceDefinitionByHost(host, version)
        if err != nil {
            return nil, err
        }
        // log success only after the error check
        log.Debug().Str("service definition", host).Msg("got service definition")

        cm.mutex.Lock()
        defer cm.mutex.Unlock()

        // reuse the running container for this service version, or start one
        rSvc, exists := cm.containers[sExternalDef.GetKey()]
        if !exists {
            rSvc, err = cm.startContainer(sExternalDef)
            if err != nil {
                return nil, err
            }
        }
        rSvc.LastTimeAccessed = time.Now()

        if !cm.isContainerReady(rSvc) {
            return nil, fmt.Errorf("container %s not ready", sExternalDef.Sdef.Name)
        }

        svcLocalHost := rSvc.GetHost()
        return &svcLocalHost, nil
    }

Main.go

The only thing that will change in our main.go is the http handler:

    func handler(w http.ResponseWriter, r *http.Request) {
        // handle admin requests
        if r.Host == admin.AdminHost {
            proxyToURL(w, r, fmt.Sprintf("%s:%d", "localhost", admin.AdminPort))
            return
        }

        svc, err := svcDefinitionManager.GetServiceDefinitionByHost(r.Host)
        if err != nil {
            log.Error().Err(err).Msg("Failed to get service definition")
            w.Write([]byte("Failed to get service definition"))
            return
        }

        // pick a version according to the latest traffic weights
        svcVersion := svc.ChooseVersion()
        log.Debug().Str("host", r.Host).Uint("service version", svcVersion).Msg("choosing service version")

        svcLocalHost, err := containerManager.GetRunningServiceForHost(r.Host, svcVersion)
        if err != nil {
            log.Error().Err(err).Msg("Failed to get running service")
            w.Write([]byte("Failed to get running service"))
            return
        }

        log.Debug().Str("host", r.Host).Str("service localhost", *svcLocalHost).Msg("proxying request")
        proxy := httputil.NewSingleHostReverseProxy(&url.URL{
            Scheme: "http",
            Host:   *svcLocalHost,
        })
        proxy.ServeHTTP(w, r)
    }

Environment variables

We already added the EnvVars property to ServiceVersion above. Here we cover how to pass it to the container that the container manager starts:

    // create container with docker run
    func (cm *DockerContainerManager) createContainer(sExternalDef *admin.ExternalServiceDefinition, assignedPort int) (*RunningService, error) {
        image := fmt.Sprintf("%s:%s", sExternalDef.Version.ImageName, sExternalDef.Version.ImageTag)
        ctx := context.Background()

        resp, err := cm.dockerClient.ContainerCreate(
            ctx,
            &container.Config{
                Image: image,
                Tty:   false,
                Env:   sExternalDef.Version.EnvVars, // passed through unchanged
            },
            &container.HostConfig{
                PortBindings: buildPortBindings(sExternalDef.Version.Port, assignedPort),
            },
            nil,
            nil,
            "",
        )
        if err != nil {
            return nil, err
        }

        if err := cm.dockerClient.ContainerStart(ctx, resp.ID, types.ContainerStartOptions{}); err != nil {
            return nil, err
        }

        rSvc := RunningService{
            ContainerID:  string(resp.ID),
            AssignedPort: assignedPort,
            Ready:        false,
        }
        return &rSvc, nil
    }

Not much was needed: ServiceVersion stores environment variables in the same format the Docker Go client expects, so we pass EnvVars straight through to container.Config.
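Since the values are passed through untouched, a malformed entry only surfaces once the container is created. A small check at the API boundary could catch it earlier. The sketch below is an optional addition, not part of the article's code: `validateEnvVars` is a hypothetical helper, and it assumes the platform wants every entry to have the `NAME=value` shape (an empty value is allowed, an empty name or a missing `=` is not).

```go
package main

import (
	"fmt"
	"strings"
)

// validateEnvVars checks that every entry looks like NAME=value.
// It returns an error describing the first entry that does not.
func validateEnvVars(envVars []string) error {
	for _, entry := range envVars {
		name, _, found := strings.Cut(entry, "=")
		if !found {
			return fmt.Errorf("env var %q is missing '='", entry)
		}
		if name == "" {
			return fmt.Errorf("env var %q has an empty name", entry)
		}
	}
	return nil
}

func main() {
	fmt.Println(validateEnvVars([]string{"ENV=prod", "GREETING=Go"}))
	fmt.Println(validateEnvVars([]string{"GREETING"}))
}
```

A natural place to call such a check would be ServiceVersion's isValid method, so invalid versions are rejected with a 400 before they are stored.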

Garbage Collection of Idle Containers

Since cLess is a serverless platform, we need a way to stop idle containers, meaning containers that haven't received traffic for a given amount of time. For instance, we can decide that a container that hasn't received traffic for 2 minutes gets garbage collected.

For that we need a way to track the last time a RunningService was accessed:

    type RunningService struct {
        ContainerID      string    // docker container ID
        AssignedPort     int       // port assigned to the container
        Ready            bool      // whether the container is ready to serve requests
        LastTimeAccessed time.Time // last time the container was accessed
    }

The LastTimeAccessed property keeps track of the last time the running service served traffic.

We also need to keep it updated. We do that in GetRunningServiceForHost, since that method is called every time the service is accessed:

    func (cm *DockerContainerManager) GetRunningServiceForHost(host string, version uint) (*string, error) {
        // ...
        cm.mutex.Lock()
        defer cm.mutex.Unlock()

        rSvc, exists := cm.containers[sExternalDef.GetKey()]
        if !exists {
            rSvc, err = cm.startContainer(sExternalDef)
            if err != nil {
                return nil, err
            }
        }
        rSvc.LastTimeAccessed = time.Now()
        // ...
    }

Let's create a method on DockerContainerManager named garbageCollectIdleContainers, which runs an infinite loop with a time.Sleep between runs:

    // garbage collect unused containers based on last time accessed
    func (cm *DockerContainerManager) garbageCollectIdleContainers() {
        for {
            cm.mutex.Lock()
            log.Info().Msg("Garbage collecting idle containers")
            for key, rSvc := range cm.containers {
                if time.Since(rSvc.LastTimeAccessed) > 1*time.Minute {
                    err := cm.dockerClient.ContainerKill(context.Background(), rSvc.ContainerID, "SIGKILL")
                    if err != nil {
                        log.Error().Err(err).Str("container name", key).Msg("Failed to kill container")
                    }

                    err = cm.dockerClient.ContainerRemove(context.Background(), rSvc.ContainerID, types.ContainerRemoveOptions{})
                    if err != nil {
                        log.Error().Err(err).Str("container name", key).Msg("Failed to remove container")
                    }

                    // forget the container and free its port
                    delete(cm.containers, key)
                    delete(cm.usedPorts, rSvc.AssignedPort)
                }
            }
            cm.mutex.Unlock()
            time.Sleep(80 * time.Second)
        }
    }

We need to call this method without blocking, for example in its own goroutine, when we create the singleton DockerContainerManager.

Testing Time:

To test all the new features we added to cLess, we will create a simple Python Flask app:

    from flask import Flask
    import os

    app = Flask(__name__)

    @app.route('/')
    def hello_world():
        greeting = os.environ.get('GREETING', 'cLess')
        return f'Hello, {greeting}!'

We will skip the ceremony of writing a Dockerfile for the app and building the image. If you need help with this, you can find it in the GitHub repo.

We will issue a few curl commands to create the service and its versions:

    curl -X POST -H "Content-Type: application/json" \
      -d '{"name":"my-python-app"}' \
      http://admin.cless.cloud/serviceDefinitions

    curl -X POST -H "Content-Type: application/json" \
      -d '{"image_name":"python-docker", "image_tag":"latest", "port":8080, "env_vars":["GREETING=Go"]}' \
      http://admin.cless.cloud/serviceDefinitions/my-python-app/versions

    curl -X POST -H "Content-Type: application/json" \
      -d '{"image_name":"python-docker", "image_tag":"latest", "port":8080, "env_vars":["GREETING=Docker"]}' \
      http://admin.cless.cloud/serviceDefinitions/my-python-app/versions

    curl -X POST -H "Content-Type: application/json" \
      -d '{"image_name":"python-docker", "image_tag":"latest", "port":8080, "env_vars":["GREETING=sqlite"]}' \
      http://admin.cless.cloud/serviceDefinitions/my-python-app/versions

The only difference between these versions is the GREETING environment variable.

We can get the version IDs by listing versions:

    curl -s http://admin.cless.cloud/serviceDefinitions/my-python-app/versions | jq '.[].ID'
    6
    7
    8

We will use these version IDs to create the traffic weight distribution:

    curl -X POST -H "Content-Type: application/json" \
      -d '{"weights":[{"service_version_id":6, "weight": 10}, {"service_version_id":7, "weight": 30}, {"service_version_id":8, "weight": 60}]}' \
      http://admin.cless.cloud/serviceDefinitions/my-python-app/trafficWeights

This will split traffic across 3 versions:

Version 6 (GREETING=Go): 10% of traffic.

Version 7 (GREETING=Docker): 30% of traffic.

Version 8 (GREETING=sqlite): 60% of traffic.

Let's get the host assigned to this app, so we can test the distribution with a bash command:

    curl -s http://admin.cless.cloud/serviceDefinitions/my-python-app | jq '.host'
    "app-51.cless.cloud"

    for i in {1..100}; do curl -s app-51.cless.cloud >> data.txt; echo "" >> data.txt; done

    cat data.txt | sort | uniq -c
    36 Hello, Docker!
    8 Hello, Go!
    56 Hello, sqlite!

We ran 100 requests, and the observed split (8 / 36 / 56) is pretty close to the 10 / 30 / 60 traffic weights we set up.

In the logs we can also see the idle container garbage collector at work:

    {"level":"info","time":"2023-08-23T09:29:19-04:00","message":"Garbage collecting idle containers"}
    {"level":"info","time":"2023-08-23T09:30:29-04:00","message":"Garbage collecting idle containers"}
    {"level":"info","svc key":"2:6","containerID":"ab61f05eb96bcc31c3ce96270744b421aa1a11a9399cdda0fc2422c8ba38a83d","time":"2023-08-23T09:30:29-04:00","message":"Removing idle container"}
    {"level":"info","svc key":"2:7","containerID":"7021c36041f51e053c596eb3da0527423fc84e3601fd6c2c23978605e8c7bc44","time":"2023-08-23T09:30:29-04:00","message":"Removing idle container"}
    {"level":"info","svc key":"2:8","containerID":"0d1809adbc97bc3c9b5e42eb4187569131946dccd079150939064b1b7935c512","time":"2023-08-23T09:30:29-04:00","message":"Removing idle container"}

Running docker ps confirms that no idle container is left running.

Conclusion:

Versioning:

With versioning, we can iterate on the services running on cLess while keeping track of every container version we deploy. Upgrading becomes simpler, because we can promote a validated version into production with minimal effort, and the version history gives us an audit trail for our cLess applications.

Traffic Weights:

We can now validate a new version with a portion of live traffic before ramping up, or roll back by shifting traffic away from a faulty version. This gives us the tools to implement the promotion workflow that best suits our use case, and it lowers the risk of each deployment.

Idle Containers:

cLess now provides an automatic garbage collector for idle containers. When a container has been inactive for a specified period, cLess stops and removes it, scaling that version to zero. This lets us run lean, using compute only while it is needed. When traffic returns, cLess spins up a new container to serve it.

The full code is in part-4 of the github repo.