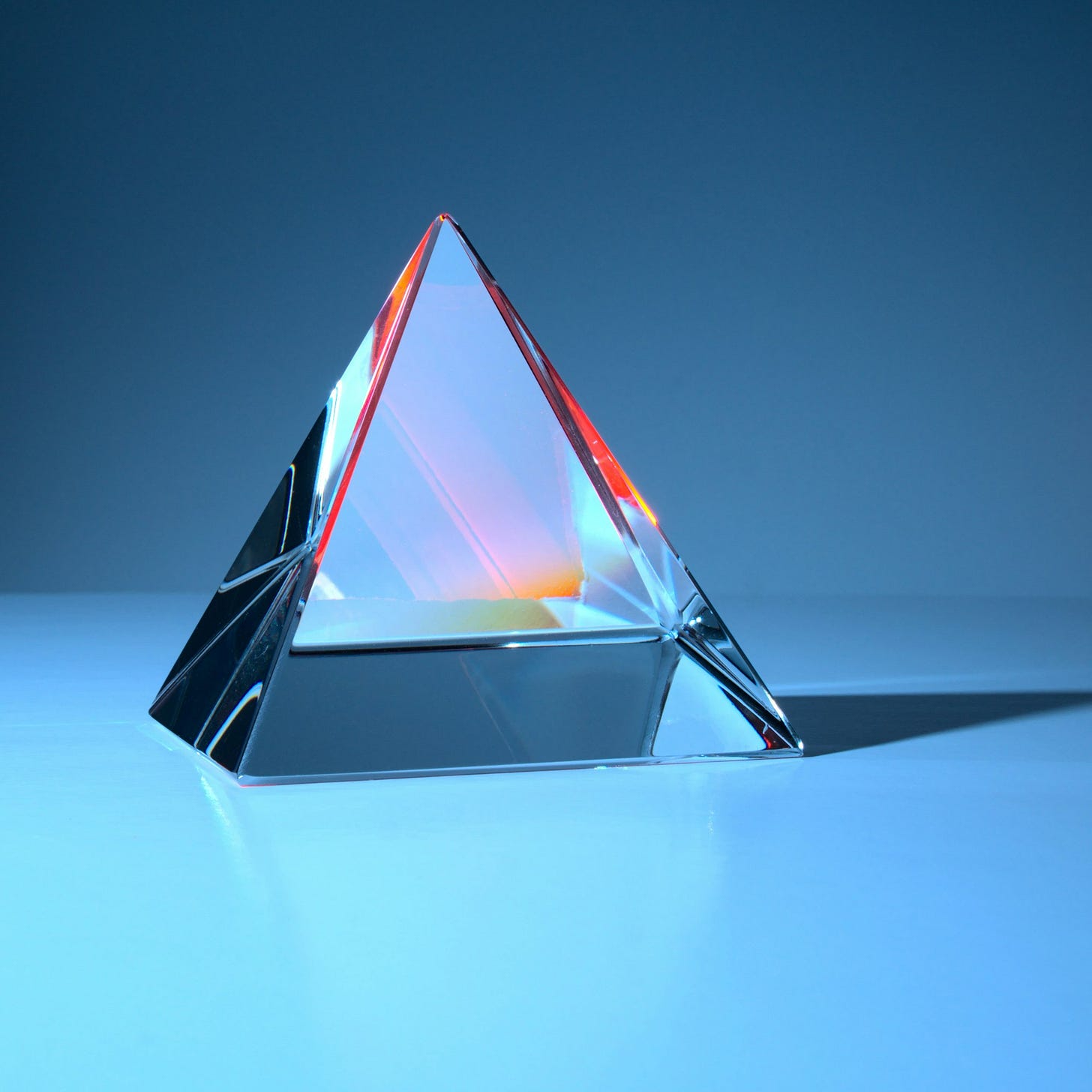

The System Resiliency Pyramid

Resilient systems are crucial for any organization aiming to provide reliable services. But what makes a system resilient?

Recently, I encountered the Code Review Pyramid by Gunnar Morling, and it gave me the idea of crafting a framework that breaks system resiliency concepts, techniques, and lifecycle into layers and answers some important questions:

What makes a system resilient?

How do we ensure a system is, and stays, resilient?

Why is system resiliency not a one-and-done problem?

The system resiliency pyramid provides a holistic framework for thinking about reliability across five key layers.

Infrastructure

At the base of the pyramid lies infrastructure, which covers the physical hardware and facilities that systems run on: servers, networks, data centers, power systems, and more. What redundancies are built into your infrastructure? Investing in redundant infrastructure improves resilience by eliminating single points of failure.
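
A back-of-the-envelope calculation shows why redundancy pays off. Assume each of $N$ replicas is available with probability $a$, that replicas fail independently, and that the service stays up as long as at least one replica is up. The combined availability is then:

$$
A = 1 - (1 - a)^{N}
$$

With $a = 0.99$ and $N = 2$, this gives $A = 1 - (0.01)^2 = 0.9999$; a third replica raises it to $1 - (0.01)^3 = 0.999999$. The independence assumption is the weak spot: replicas that share a rack, a power feed, or a data center tend to fail together, so the real-world gain is smaller.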

There isn't an easy answer when it comes to infrastructure, but below are some questions engineers can ask:

Does the system have sufficient redundancy?

Is there a backup strategy in place?

How resilient is the network setup against disruptions?

Are there any single points of failure in the infrastructure setup?

What happens if a data center or a cloud region is hit by a tornado?
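
Some of these questions can be partly automated. The following is a minimal Python sketch, assuming a hypothetical inventory of `Instance` records (component name plus zone); in practice that data would come from your cloud provider or configuration management. It flags components that run as a single instance, and components whose copies all sit in one zone, which a single data center outage would take down together.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """Hypothetical inventory record: one running copy of a component."""
    component: str
    zone: str  # availability zone or data center


def find_single_points_of_failure(instances: list[Instance]) -> dict[str, str]:
    """Return components that lack redundancy, with a reason for each."""
    zones_by_component: dict[str, list[str]] = defaultdict(list)
    for inst in instances:
        zones_by_component[inst.component].append(inst.zone)

    findings: dict[str, str] = {}
    for component, zones in zones_by_component.items():
        if len(zones) == 1:
            findings[component] = "single instance"
        elif len(set(zones)) == 1:
            # Several copies, but one zone outage (or tornado) takes them all out.
            findings[component] = f"all instances in zone {zones[0]}"
    return findings


inventory = [
    Instance("api", "zone-a"), Instance("api", "zone-b"),
    Instance("db", "zone-a"),
    Instance("cache", "zone-a"), Instance("cache", "zone-a"),
]
print(find_single_points_of_failure(inventory))
# {'db': 'single instance', 'cache': 'all instances in zone zone-a'}
```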

System Design

System design in software engineering refers to the high-level architecture and structure of a software system. It involves making design choices and tradeoffs to meet functional and non-functional requirements.

Some key aspects of system design include:

Architecture - Determining the overall components of the system and how they interact. This includes choosing things like distributed vs monolithic architecture, client-server model, microservices, etc.

Partitioning - Breaking up the system into modules and components. This involves determining how to divide responsibilities and functionality.

Interfaces - Defining how components communicate and interact with each other through APIs, function calls, protocols, etc.

Scalability - Designing for growth in users, traffic, data volume, etc. This shapes choices such as horizontal vs vertical scaling.

Security - Incorporating mechanisms for access control, encryption, obfuscation, auditing, etc.

Reliability - Planning for resilience via redundancy, failover, graceful degradation, etc.

Performance - Optimizing speed, responsiveness, and efficiency.

Maintainability - Allowing updates and changes to be made without major disruption to the service.

The main goals are breaking the system into logical pieces, defining relationships and interactions, and making appropriate tradeoffs to satisfy functional needs as well as quality attributes like scalability, reliability, and performance. This high-level blueprint guides lower-level implementation.
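
To make the scalability and reliability items concrete, here is a minimal sketch of horizontal scaling behind a round-robin load balancer that skips unhealthy instances. The class and instance names are hypothetical, and in production this job is usually done by a dedicated load balancer rather than application code; the sketch only illustrates how redundant, interchangeable instances and health awareness combine.

```python
import itertools


class RoundRobinBalancer:
    """Hypothetical balancer: spread requests over stateless instances, skipping unhealthy ones."""

    def __init__(self, instances: list[str]) -> None:
        self._instances = list(instances)
        self._healthy = set(instances)
        self._cycle = itertools.cycle(self._instances)

    def mark_down(self, instance: str) -> None:
        self._healthy.discard(instance)

    def mark_up(self, instance: str) -> None:
        if instance in self._instances:
            self._healthy.add(instance)

    def next_instance(self) -> str:
        # Look at each instance at most once per call so we never loop forever.
        for _ in range(len(self._instances)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy instances available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")  # e.g. after a failed health check
print([lb.next_instance() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

Because the instances are interchangeable, scaling out is a matter of adding names to the pool, and losing one instance only reduces capacity instead of taking the service down.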

Data

When it comes to data, there is no easy answer about what should be done; however, several questions can be asked to avoid surprises, because data surprises do not just mean a lack of resiliency: they can mean the end of a business altogether.

Below is a list of questions. It is not exhaustive, but it covers questions you must answer before you embark on writing resilient software:

Should data be replicated in multiple locations: hosts, regions, clouds?

Are there mechanisms in place to ensure the atomicity and consistency of transactions?

How does the system handle conflicting writes?

What safeguards are in place to protect data integrity?

Are there any risks of data loss?

What database technology should we use? (the $1M question)
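
For the atomicity question, most relational databases offer transactions that either apply every change or none. Below is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for whatever database you choose; the `accounts` table and the `transfer` function are hypothetical. Using the connection as a context manager commits the transaction on success and rolls it back if an exception is raised.

```python
import sqlite3


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    """Hypothetical transfer: both updates commit together, or neither does."""
    # `with conn` commits on success and rolls back if the block raises.
    with conn:
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM accounts WHERE name = ?", (src,)
        ).fetchone()
        if balance < 0:
            raise ValueError("insufficient funds")  # triggers the rollback
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER NOT NULL)")
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])

transfer(conn, "alice", "bob", 30)
try:
    transfer(conn, "alice", "bob", 500)  # would overdraw alice, so it is rolled back
except ValueError:
    pass
print(conn.execute("SELECT name, balance FROM accounts ORDER BY name").fetchall())
# [('alice', 70), ('bob', 30)]
```

The failed transfer leaves both balances exactly as they were, so no half-applied change is ever visible.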

Fault Tolerance

While foundational fault tolerance capabilities are established at the system design layer, many organizations fail to thoroughly incorporate resilience mechanisms into their architectures.

Without proper upfront consideration, fault tolerance easily becomes an afterthought. This lack of rigorous planning and design for reliability can undermine system robustness when disruptions occur.

To build truly resilient systems, fault tolerance concepts like redundancy, automated failover, graceful degradation, and retries need to be deeply incorporated into the initial system design.
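
Here is a minimal sketch of automated failover combined with graceful degradation, assuming each redundant source is a plain Python callable (the `primary`, `replica`, and `fetch_with_fallback` names are hypothetical). Sources are tried in order, and when all of them fail the caller gets a degraded default instead of an error, along with a flag saying it is degraded.

```python
from typing import Callable


def fetch_with_fallback(
    sources: list[Callable[[], str]],
    default: str,
) -> tuple[str, bool]:
    """Try each redundant source in order; degrade to `default` if all fail.

    Returns (value, degraded) so callers and metrics can tell when the
    system is running in degraded mode.
    """
    for source in sources:
        try:
            return source(), False
        except Exception:
            # Failover: move on to the next source. A real system would log
            # and count this failure rather than swallow it silently.
            continue
    return default, True


def primary() -> str:
    """Hypothetical primary that is currently down."""
    raise ConnectionError("primary region unavailable")


def replica() -> str:
    """Hypothetical healthy replica."""
    return "fresh recommendations"


print(fetch_with_fallback([primary, replica], default="popular items"))
# ('fresh recommendations', False)
print(fetch_with_fallback([primary], default="popular items"))
# ('popular items', True)
```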

Too often, teams retrofit availability patterns late in development or after launch. Architecting for reliability from the start results in cohesive systems that gracefully handle inevitable crashes and overload conditions.
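
One way to handle overload gracefully is load shedding: rejecting low-priority work early so capacity remains for critical requests. The sketch below is a minimal, in-process version under simple assumptions (a fixed concurrency limit, and callers that label each request as essential or not); `LoadShedder` and its parameters are hypothetical names, not a library API.

```python
import threading


class LoadShedder:
    """Admit work up to a concurrency limit, shedding non-essential work first.

    Non-essential requests may only use `capacity - reserved_for_essential`
    slots, so a burst of optional work cannot starve critical requests.
    """

    def __init__(self, capacity: int, reserved_for_essential: int) -> None:
        if not 0 <= reserved_for_essential <= capacity:
            raise ValueError("reserved_for_essential must be between 0 and capacity")
        self._capacity = capacity
        self._reserved = reserved_for_essential
        self._in_flight = 0
        self._lock = threading.Lock()

    def try_acquire(self, essential: bool) -> bool:
        """Return True if the request is admitted, False if it should be shed."""
        with self._lock:
            limit = self._capacity if essential else self._capacity - self._reserved
            if self._in_flight >= limit:
                return False  # shed: the caller should fail fast instead of queuing
            self._in_flight += 1
            return True

    def release(self) -> None:
        """Call when admitted work finishes (for example in a finally block)."""
        with self._lock:
            self._in_flight -= 1


shedder = LoadShedder(capacity=3, reserved_for_essential=1)
print([shedder.try_acquire(essential=False) for _ in range(3)])  # [True, True, False]
print(shedder.try_acquire(essential=True))  # True: the reserved slot is still free
```

A shed request would typically get a fast error response (such as HTTP 503) so clients can back off, rather than waiting in a queue behind work the server cannot keep up with.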

By elevating fault tolerance as a first-class concern early in design, organizations can enhance system resiliency and minimize the impact of outages on customers.

Questions:

What failures or faults are most likely to occur?

How can we design redundancy into the system? Examples: duplicate servers, hot standbys, multi-region deployments.

How will the system degrade gracefully when overloaded?

Can we shed non-essential work?

How will the system handle component failures? Do we need health checks and auto-restart?

What kind of automated failover capability is needed? Active-passive? Active-active?

How can we isolate faults to prevent cascading failures across components?

What fallbacks/defaults can we implement for when parts of the system fail?

How can we implement retries/backoff for transient errors?

Should we implement deadlines to avoid doing unnecessary work?

How will system integrity be protected if a corrupted component needs to be terminated?

How can system changes and updates be made without downtime?
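
For the retry and deadline questions, a common pattern is capped exponential backoff with jitter, bounded by an overall deadline. Below is a minimal Python sketch, assuming the caller can tell transient errors apart (modelled here by a hypothetical `TransientError` exception). It uses the "full jitter" variant, where each wait is a random value between zero and the capped exponential delay, and it stops retrying once the next wait would run past the deadline. The `sleep` and `clock` parameters exist only so the function can be tested without real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 503s, ...)."""


def retry_with_backoff(
    operation: Callable[[], T],
    deadline_s: float,
    base_delay_s: float = 0.1,
    max_delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Retry `operation` on TransientError using capped exponential backoff
    with full jitter, giving up once the next wait would pass the deadline."""
    give_up_at = clock() + deadline_s
    attempt = 0
    while True:
        try:
            return operation()
        except TransientError:
            # Capped exponential delay: 0.1s, 0.2s, 0.4s, ... up to max_delay_s.
            # (The exponent is clamped so very long retry loops cannot overflow.)
            cap = min(max_delay_s, base_delay_s * 2 ** min(attempt, 30))
            # Full jitter: wait a random time in [0, cap] so many clients
            # retrying at once do not hit the service in lockstep.
            delay = random.uniform(0, cap)
            if clock() + delay >= give_up_at:
                raise  # out of time: surface the last transient error
            sleep(delay)
            attempt += 1


calls = {"count": 0}


def flaky() -> str:
    """Hypothetical dependency that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporarily unavailable")
    return "ok"


print(retry_with_backoff(flaky, deadline_s=5.0))  # prints "ok" after two retries
```

Errors that are not transient (here, anything other than `TransientError`) propagate immediately. Only operations that are safe to repeat should be retried this way; otherwise a retry after a timeout can apply the same change twice.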

Tests & Observability

How do you validate resilience?

How quickly can issues be diagnosed and resolved?

A comprehensive testing strategy incorporates validation at all levels of the system, from the developer's machine to the customer experience:

Comprehensive testing across layers validates robustness; for instance, unit, integration, end-to-end (e2e), and functional tests reduce exposure to bugs.

Performance and load testing can help identify bottlenecks.

Chaos engineering deliberately injects failures to verify resilience and to validate assumptions about how the system behaves under failure.
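
Chaos experiments usually run against real environments (terminating instances, adding latency, cutting network links), but the same idea can be applied in an automated test. The sketch below wraps a dependency so that a fraction of calls fail, then checks that the system under test degrades instead of crashing. `inject_faults`, `get_profile`, and the 50% failure rate are hypothetical; the fixed random seed keeps the experiment reproducible.

```python
import random
from typing import Callable


def inject_faults(
    func: Callable[[], str], failure_rate: float, rng: random.Random
) -> Callable[[], str]:
    """Wrap a dependency so that a fraction of calls raise, simulating an outage."""
    def wrapper() -> str:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return func()
    return wrapper


def get_profile(fetch: Callable[[], str]) -> str:
    """Hypothetical system under test: should degrade, not crash, when its dependency fails."""
    try:
        return fetch()
    except ConnectionError:
        return "cached profile"


def test_profile_survives_dependency_faults() -> None:
    rng = random.Random(42)  # seeded so the experiment is reproducible
    flaky_fetch = inject_faults(lambda: "live profile", failure_rate=0.5, rng=rng)
    results = [get_profile(flaky_fetch) for _ in range(100)]
    # Every call must return something usable; none may raise.
    assert set(results) <= {"live profile", "cached profile"}
    assert "cached profile" in results  # the fallback path was actually exercised


test_profile_survives_dependency_faults()
print("resilience check passed")
```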

Robust monitoring and observability tooling provides real-time visibility into system health, enabling teams to rapidly detect and diagnose anomalies. Configurable alerts notify engineers when key performance indicators exceed acceptable thresholds, prompting investigation and mitigation before outages impact users.
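
Alert rules are normally configured in the monitoring system itself (Prometheus, for example, lets an alerting rule require a condition to hold `for` a duration before firing). To show the logic, here is a minimal sketch that fires when p99 latency exceeds a threshold for several consecutive evaluation windows; the threshold, window count, and class name are hypothetical, and the percentile uses the simple nearest-rank method.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


class LatencyAlert:
    """Hypothetical rule: fire when p99 latency stays above a threshold for several windows."""

    def __init__(self, threshold_ms: float, windows_required: int = 3) -> None:
        self.threshold_ms = threshold_ms
        self.windows_required = windows_required
        self._breaches = 0

    def evaluate(self, window_latencies_ms: list[float]) -> bool:
        """Feed one window of latency samples; return True if the alert should fire."""
        if percentile(window_latencies_ms, 99) > self.threshold_ms:
            self._breaches += 1
        else:
            # A healthy window resets the count, so one brief spike does not page anyone.
            self._breaches = 0
        return self._breaches >= self.windows_required


alert = LatencyAlert(threshold_ms=250, windows_required=2)
healthy = [120.0] * 99 + [200.0]
slow = [120.0] * 95 + [900.0] * 5
print([alert.evaluate(window) for window in (healthy, slow, slow)])  # [False, False, True]
```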

Comprehensive observability platforms track essential system metrics, aggregate log data, and monitor health indicators across components. Correlating metrics and logs speeds up root cause analysis, and dashboards offer at-a-glance views of reliability metrics to assess system status. By leveraging monitoring and observability tooling, organizations gain deep visibility into system health and can verify that their systems are as resilient as they need to be.
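
Correlating logs is much easier when every log line carries the same request ID. Below is a minimal sketch using only Python's standard `logging`, `contextvars`, and `json` modules: a filter stamps the current request ID onto each record, and a formatter writes one JSON object per line. The logger name, field names, and `handle_request` function are hypothetical; distributed tracing setups typically propagate a trace ID across services for the same purpose.

```python
import contextvars
import io
import json
import logging
import uuid
from typing import TextIO

# ID of the request currently being handled; contextvars keep it separate
# per thread and per asyncio task.
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Stamp the current request ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id_var.get()
        return True


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per line so a log pipeline can index the fields."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "request_id": getattr(record, "request_id", "-"),
            "message": record.getMessage(),
        })


def make_logger(stream: TextIO) -> logging.Logger:
    handler = logging.StreamHandler(stream)
    handler.addFilter(RequestIdFilter())
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger("checkout")  # hypothetical service name
    logger.handlers.clear()
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def handle_request(logger: logging.Logger) -> str:
    """Hypothetical request handler: every line it logs shares one ID."""
    token = request_id_var.set(str(uuid.uuid4()))
    try:
        logger.info("payment started")
        logger.warning("payment provider slow")
        return request_id_var.get()
    finally:
        request_id_var.reset(token)


buffer = io.StringIO()
request_id = handle_request(make_logger(buffer))
print(buffer.getvalue())
```

Searching the aggregated logs for a single `request_id` then returns every line that request produced, across all components that log the same field.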

Conclusion

The system resiliency pyramid provides a framework for thinking holistically about reliability. Following resilient design principles across the key layers leads to systems that can better withstand stress, disruption, and failure. This reduces downtime and protects customers from the chaos of the real world. How resilient are your systems?

If you enjoyed this, you will also enjoy all the content we have in the making!