Achieving Fault Tolerance: Strategies for Building Reliable Systems

Series: Principles of Reliable Software Design

Building distributed systems that stay resilient in the face of inevitable failures is a fundamental engineering challenge. When software is deployed across networks and servers, faults are no longer the exception but a given. Hardware can fail, networks can partition, and entire data centers can go offline. As complexity increases, so do the potential points of failure.

That's where fault tolerance comes in. Fault tolerance is the ability of a system to continue operating properly even when some of its components fail. It focuses on handling faults gracefully and maintaining high availability despite disruptions. Fault-tolerant systems weather these storms by identifying their points of failure in advance and handling each one deliberately.

In this post, we’ll explore common sources of failure, strategies for fault tolerance, and tools that help tame the chaos. By the end, you’ll understand critical techniques for architecting resilient distributed systems that stand the test of time and turbulence. This is indispensable knowledge for any engineer working on production-grade software, especially in fields like web services, databases, and cloud infrastructure.

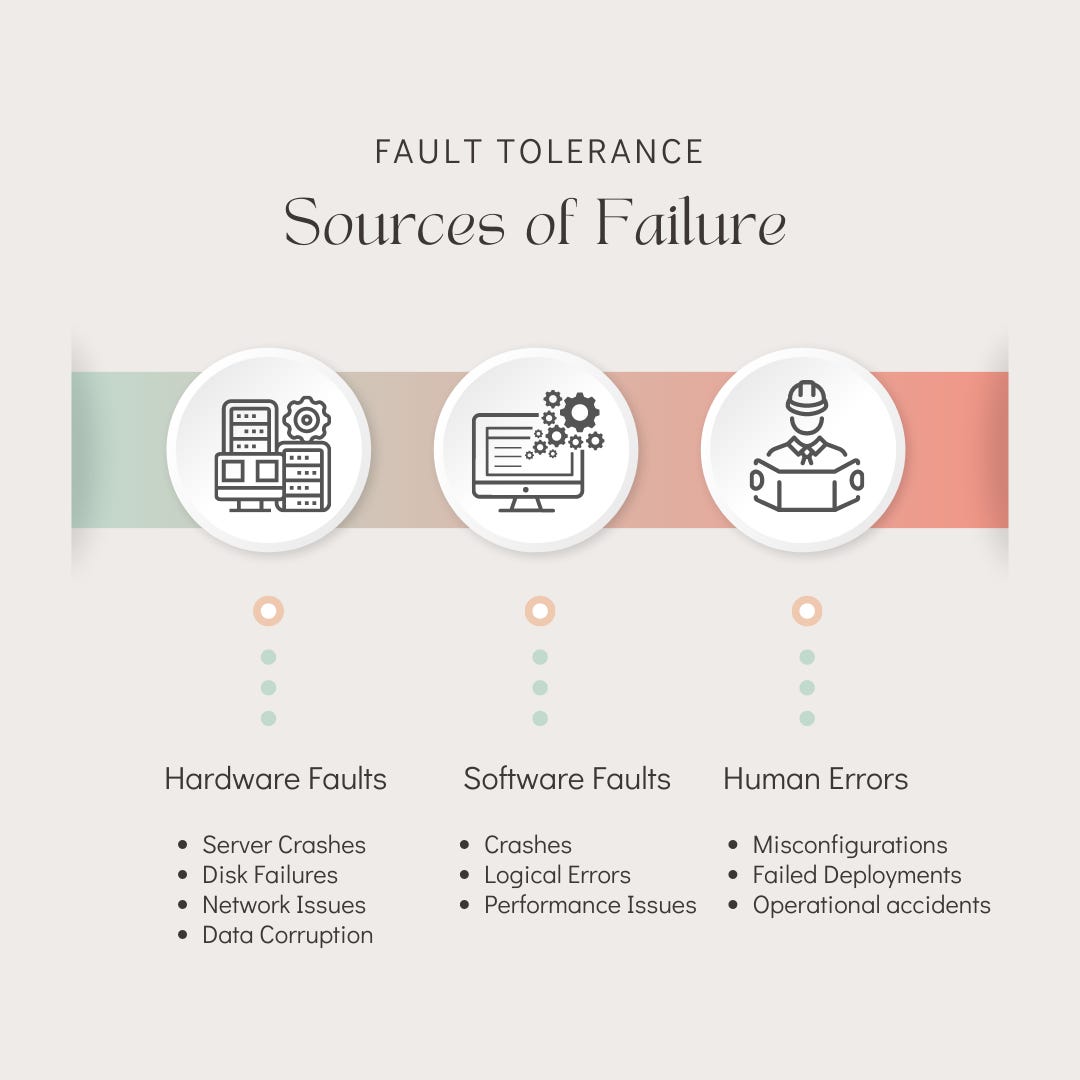

Sources of Failure

Let's first look at why systems fail. Most failures can be traced back to hardware malfunctions, software bugs, human error, or a combination of these. Understanding these underlying causes is essential for designing robust systems that resist failure and keep functioning under challenging conditions.

Hardware faults - Physical components can fail in a number of ways:

Server crashes: Power outage, hardware malfunction, overheating

Disk failures: Drives wear out and fail; RAID can mitigate the impact, but individual disks still fail

Network issues: Packets dropped, latency spikes, disconnects

Data corruption: Cosmic rays, signal noise, worn-out media

Software faults - Bugs in code can also disrupt systems:

Crashes and hangs: Unhandled exceptions, infinite loops, deadlocks

Logical errors: Race conditions, invalid state transitions

Performance issues: Memory leaks, traffic spikes, blocking calls

Human errors - People managing systems can also make mistakes:

Misconfigurations: Wrong settings, or settings applied inconsistently across environments

Failed deployments: Unintended side effects, service disruptions

Operational accidents: Mistaken manual actions, insufficient safeguards

Strategies for Fault Tolerance

This post focuses on four complementary strategies for building fault tolerance into distributed systems: redundancy, error detection, graceful degradation, and isolation.

Redundancy

Redundancy aims to remove single points of failure by providing spare capacity that can take over if any component fails. This can be implemented across servers, networks, data stores, and geographic regions. The goal is that losing any single component causes neither downtime nor data loss.

Active-passive redundancy: Keep backup servers or components on standby until the primary fails.

Active-active redundancy: Run all replicas live and spread load across them.

Replication: Maintain multiple copies of data distributed across nodes.

Load balancing: Distribute requests across multiple servers.

Spare capacity: Extra network links, servers, storage space.

Hot swaps: Replace failed components without downtime.

Failover: Automatically switch to backup if primary fails.

Rollback: Revert to the last known good state when an error is detected.

For a more in-depth look at redundancy, see our dedicated deep dive on the topic.
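
To make failover concrete, here is a minimal Python sketch of client-side active-passive failover. It assumes each replica can be modeled as a plain function that takes a request and either returns a response or raises an exception; `FailoverClient`, `primary`, and `standby` are hypothetical names for illustration, not part of any library.

```python
from typing import Callable, List, Optional

# Hypothetical replica interface for this sketch: a callable that takes a
# request string and returns a response string, raising on failure.
Replica = Callable[[str], str]


class FailoverClient:
    """Active-passive failover: all traffic goes to the active replica, and
    the next replica is promoted when the active one raises an error."""

    def __init__(self, replicas: List[Replica]) -> None:
        if not replicas:
            raise ValueError("need at least one replica")
        self.replicas = replicas
        self.active = 0  # index of the replica currently serving traffic

    def call(self, request: str) -> str:
        last_error: Optional[Exception] = None
        # Try each replica at most once, starting with the active one.
        for _ in range(len(self.replicas)):
            try:
                return self.replicas[self.active](request)
            except Exception as exc:  # treat any error as a failed replica
                last_error = exc
                # Fail over: promote the next replica, wrapping around.
                self.active = (self.active + 1) % len(self.replicas)
        raise RuntimeError("all replicas failed") from last_error


# Usage: the primary is down, so the standby takes over.
def primary(request: str) -> str:
    raise ConnectionError("primary unreachable")


def standby(request: str) -> str:
    return f"standby handled {request}"


client = FailoverClient([primary, standby])
print(client.call("GET /orders"))  # standby handled GET /orders
print(client.active)               # 1: later calls go straight to the standby
```

One simplification worth noting: the sketch never fails back to the original primary, and it treats every exception as a dead replica. Production systems usually pair failover with health checks so that a single slow response does not trigger a promotion.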

Error Detection

The focus here is on actively monitoring the system so errors are caught quickly, before they cascade. Early detection makes it possible to fail over to redundant components and isolate problems while they are still small. Health checks and sanity checks on individual components are the main ways to apply error detection across a system.

Health checks: Monitor system metrics and test key component functionality.

Heartbeat messages: Nodes ping each other frequently to check availability.

Alerting: Get notified when key metrics breach safe thresholds.

Failure detectors: Algorithms that decide when a node should be suspected of having crashed, typically based on missed heartbeats or timeouts.

Sanity checks: Validate outputs and internal state for consistency.

Logging: Record enough debug info to diagnose faults post-mortem.

Synthetic monitoring: Simulate user transactions to proactively find issues.
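
Heartbeats and failure detectors work together: every node reports in periodically, and a detector suspects any node it has not heard from recently. The following is a minimal timeout-based sketch in Python; `HeartbeatMonitor` is a hypothetical name, and the clock is injectable so the example runs instantly with simulated time.

```python
import time
from typing import Callable, Dict, List


class HeartbeatMonitor:
    """Timeout-based failure detector: a node becomes suspected if no
    heartbeat has arrived from it within the last `timeout` seconds."""

    def __init__(self, timeout: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout = timeout
        self.clock = clock
        self.last_seen: Dict[str, float] = {}  # node -> time of last heartbeat

    def heartbeat(self, node: str) -> None:
        # Call this whenever a heartbeat message arrives from `node`.
        self.last_seen[node] = self.clock()

    def suspected(self) -> List[str]:
        # Nodes that have been silent for longer than the timeout.
        now = self.clock()
        return sorted(node for node, seen in self.last_seen.items()
                      if now - seen > self.timeout)


# Usage with simulated time, so the example runs instantly.
fake_now = [0.0]
monitor = HeartbeatMonitor(timeout=3.0, clock=lambda: fake_now[0])
monitor.heartbeat("node-a")
monitor.heartbeat("node-b")
fake_now[0] = 2.0
monitor.heartbeat("node-a")  # node-b stays silent from here on
fake_now[0] = 4.0
print(monitor.suspected())  # ['node-b']
```

Because a timeout cannot tell a crashed node from a slow or partitioned one, the result is best read as "suspected" rather than "confirmed dead"; choosing the timeout trades detection speed against false alarms.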

Graceful Degradation

The goal of graceful degradation is to keep the system operational even when some functionality has to be limited during an incident. This means separating essential from non-essential operations and establishing tactics that protect critical functions when failures occur.

Load shedding: Drop less important requests when overloaded.

Priority queues: Rank tasks and process higher priority ones first.

Retries with backoff: Gradually wait longer between retries on failure.

Progressive enhancement: Deliver core functionality first, then layer enhancements on top.

Feature degradation: Define a minimum viable mode and switch off non-essential features when needed.
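
Retries with backoff can be sketched as follows. This is a minimal Python version assuming the common "full jitter" variant, where each delay is drawn at random between zero and an exponentially growing cap; `retry_with_backoff` and its parameters are hypothetical, and `sleep` is injectable so the example can record delays instead of actually waiting.

```python
import random
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def retry_with_backoff(operation: Callable[[], T], max_attempts: int = 5,
                       base_delay: float = 0.1, max_delay: float = 5.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying on any exception. After failed attempt n
    (counting from 0), wait a random time in
    [0, min(max_delay, base_delay * 2**n)] before trying again."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter spreads retries out so clients don't retry in lockstep.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


# Usage: an operation that fails twice with a transient error, then succeeds.
calls = {"count": 0}


def flaky_operation() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


delays: List[float] = []
result = retry_with_backoff(flaky_operation, sleep=delays.append)
print(result, len(delays))  # ok 2
```

Retries are only safe for idempotent operations, or for operations protected by an idempotency key; otherwise a retry after a timeout can apply the same change twice.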

Isolation

The goal of isolation is to limit the spread and impact of any given failure. This is done by designing decoupled components, safe failure modes, and operational boundaries that prevent localized issues from taking down the whole system.

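Bulkheads: Partition resources such as thread pools and connections per component, so a failure in one cannot exhaust the others.

Circuit breakers: Temporarily stop calling a failing dependency so requests fail fast instead of cascading failures through the system.

Rate limiting: Automatically restrict resource usage to protect critical work.

Sandboxing: Separate untested new code from the production environment.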

Fault containment: Design explicit failure domains for requests.

Pooling: Reuse a limited set of resources instead of unbounded creation.

Performance isolation: Contain and control heavy loads and congestion.
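
A bulkhead can be as simple as a semaphore per dependency. The Python sketch below, built on `threading.BoundedSemaphore`, is one possible approach rather than a reference design; `Bulkhead`, `BulkheadFullError`, and the payments dependency are hypothetical. When every slot is taken, new calls are rejected immediately instead of queueing.

```python
import threading
from typing import Callable, TypeVar

T = TypeVar("T")


class BulkheadFullError(Exception):
    """Raised when a bulkhead has no free slots left."""


class Bulkhead:
    """Caps concurrent calls into one dependency, so a slow or hung
    dependency can tie up at most `max_concurrent` threads."""

    def __init__(self, name: str, max_concurrent: int) -> None:
        self.name = name
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, operation: Callable[[], T]) -> T:
        # Non-blocking acquire: reject right away instead of queueing.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError(f"bulkhead {self.name!r} is full")
        try:
            return operation()
        finally:
            self._slots.release()


# Usage: one slot for a (hypothetical) payments dependency.
payments = Bulkhead("payments", max_concurrent=1)
started, release = threading.Event(), threading.Event()


def slow_charge() -> str:
    started.set()    # signal that the slot is now held
    release.wait()   # simulate a dependency that hangs until released
    return "charged"


worker = threading.Thread(target=payments.call, args=(slow_charge,))
worker.start()
started.wait()

try:
    payments.call(lambda: "charged")
    rejected = False
except BulkheadFullError:
    rejected = True  # the second call fails fast instead of piling up
print(rejected)  # True

release.set()
worker.join()
print(payments.call(lambda: "charged"))  # charged
```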

Some examples include sandboxing risky code, performance isolation, and designing microservices that can fail without impacting others. Isolation is a powerful technique for limiting the blast radius of a disruption.
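
Circuit breakers are commonly described as a small state machine: closed (calls flow normally), open (calls fail fast), and half-open (a trial call probes whether the dependency has recovered). Here is a minimal single-threaded Python sketch of that idea; `CircuitBreaker`, `CircuitOpenError`, and the thresholds are hypothetical, and the clock is injectable so the example can simulate the passage of time.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0                       # consecutive failures while closed
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: let a trial call through
        return "open"

    def call(self, operation: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("failing fast: dependency marked unhealthy")
        try:
            result = operation()
        except Exception:
            self._record_failure()
            raise
        # Any success, including a half-open trial, closes the circuit.
        self.failures = 0
        self.opened_at = None
        return result

    def _record_failure(self) -> None:
        if self.state == "half-open":
            self.opened_at = self.clock()  # trial failed: reopen right away
            return
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()  # too many failures: trip the breaker


# Usage with simulated time.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0,
                         clock=lambda: now[0])


def broken() -> str:
    raise TimeoutError("downstream timed out")


for _ in range(2):
    try:
        breaker.call(broken)
    except TimeoutError:
        pass
print(breaker.state)  # open
now[0] = 10.0
print(breaker.state)  # half-open
print(breaker.call(lambda: "recovered"), breaker.state)  # recovered closed
```

A production breaker would also need locking for concurrent callers and would usually allow only a limited number of trial requests while half-open; libraries such as Resilience4j handle those details.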

Tools and Frameworks

There are many tools and frameworks that provide implementation support for fault tolerance strategies. At the code level, languages and libraries have abstractions like exceptions, promises, and supervision trees. These make it easier to write robust components and quickly handle errors.
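
Supervision trees, popularized by Erlang/OTP, follow a "let it crash" approach: a supervisor watches a worker, restarts it when it fails, and escalates to its own parent if restarts keep failing. The Python sketch below captures only that restart-and-escalate loop for a single worker; `supervise` is a hypothetical helper, and real OTP supervisors additionally limit restarts within a time window and manage whole trees of processes.

```python
from typing import Callable, List


def supervise(worker: Callable[[], None], max_restarts: int = 3) -> List[str]:
    """Run `worker`, restarting it after each crash. Returns the crash log
    once the worker finishes normally; escalates after `max_restarts`."""
    crashes: List[str] = []
    while True:
        try:
            worker()
            return crashes  # worker completed without crashing
        except Exception as exc:
            crashes.append(repr(exc))  # record the crash, then restart
            if len(crashes) > max_restarts:
                # Give up and escalate, as a child supervisor would report
                # to its parent in a supervision tree.
                raise RuntimeError(
                    f"worker crashed {len(crashes)} times; escalating"
                ) from exc


# Usage: a (hypothetical) worker that crashes on its first two runs.
runs = {"count": 0}


def flaky_worker() -> None:
    runs["count"] += 1
    if runs["count"] <= 2:
        raise ValueError(f"bad message #{runs['count']}")


crash_log = supervise(flaky_worker)
print(len(crash_log))  # 2
```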

Languages and libraries

Java: Exception handling with try/catch, plus libraries such as Resilience4j for circuit breakers, retries, bulkheads, and rate limiting.

Go: The built-in recover() for handling panics, plus libraries such as hystrix-go and goresilience.

Feature flags: Help with sandboxing new code and are available for most major languages, for example through OpenFeature, Unleash, and Flipit.

Infrastructure: Cloud platforms make it easier to manage redundancy and isolation through auto-scaling groups, load balancers, and availability zones.

Testing:

Chaos engineering and fault injection: Chaos testing tools deliberately inject failures to verify that systems handle disruptions gracefully. This is a powerful technique for building confidence.
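
Chaos engineering tools typically inject faults at the infrastructure level, for example by terminating instances or adding network latency. The same idea can be applied inside application code, as in this minimal Python sketch; `inject_faults` is a hypothetical wrapper that makes a configurable fraction of calls raise a simulated `ConnectionError`, using a seeded random generator so runs are reproducible.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


def inject_faults(operation: Callable[[], T], failure_rate: float,
                  rng: random.Random) -> Callable[[], T]:
    """Return a wrapper that raises a simulated fault on roughly
    `failure_rate` of calls and otherwise delegates to `operation`."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise ConnectionError("injected fault")
        return operation()
    return wrapped


# Usage: make about 30% of calls to a (hypothetical) profile lookup fail.
flaky_lookup = inject_faults(lambda: "profile", failure_rate=0.3,
                             rng=random.Random(42))
failures = 0
for _ in range(1000):
    try:
        flaky_lookup()
    except ConnectionError:
        failures += 1
print(f"{failures} of 1000 calls failed")
```

Wrapping a dependency this way in a test environment lets you check that the retry, failover, and circuit-breaker logic in its callers actually engages.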

End-to-end (E2E) testing: Tools like Playwright make it easy to write end-to-end tests that continuously validate that the system still works as expected.

Conclusion

There are a few key takeaways from our exploration of fault tolerance:

Fault tolerance enables reliability. By planning for inevitable failures, systems can continue operating properly despite disruptions. This resilience is essential for distributed systems.

Combining strategies is most effective. Redundancy, error detection, graceful degradation, and isolation complement each other when used together. Diverse techniques are needed for diverse faults.

Holistic design brings fault tolerance to life. From architecture to deployment to monitoring, make fault tolerance a first-class concern across the entire software lifecycle.

Operational excellence never ends. Keep learning from incidents, evolving designs, and testing assumptions to adapt to emerging failure modes.

Fault-tolerant thinking provides a foundation for reliable distributed systems. By focusing on resilience up front, your software can withstand the chaos as it scales over time. Use these lessons to architect systems that treat faults as facts of life.
