Scaling Software Systems: 10 Key Factors

As part of my 12-part series on the Principles of Reliable Software Design, this post will focus on scalability - one of the most critical elements in building robust, future-proof applications.

In today's world of ever-increasing data and users, software needs to be ready to adapt to higher loads. Neglecting scalability is like constructing a beautiful house on weak foundations - it may look great initially but will eventually crumble under strain.

Whether you're building an enterprise system, a mobile app, or even something for personal use, how do you ensure your software can smoothly handle growth? A scalable system provides a great user experience even during traffic spikes and high usage. An unscalable app is frustrating at best and, at worst, becomes unusable or crashes altogether under increased load.

In this post, we'll explore 10 areas that are key to designing highly scalable architectures. By mastering these concepts, you can develop software capable of being deployed on a large scale without expensive rework. Your users will thank you for building an app that delights them today as much as it will tomorrow when your user base has grown 10x.

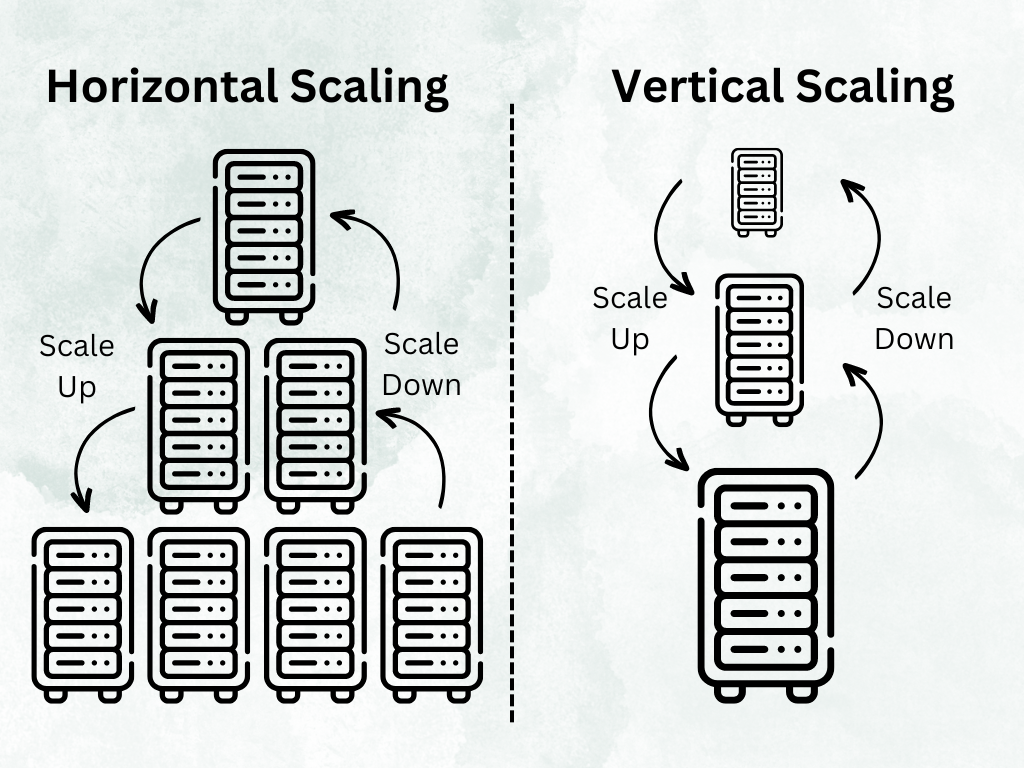

Horizontal vs. Vertical Scaling

One of the first key concepts in scalability is understanding the difference between horizontal and vertical scaling. Horizontal scaling means increasing capacity by adding more machines or nodes to your system. For example, adding more servers to support increased traffic to your application.

Vertical scaling involves increasing the power of existing nodes, such as upgrading to servers with faster CPUs, more RAM, or increased storage capacity.

In general, horizontal scaling is preferred because it provides greater reliability through redundancy. If one node fails, other nodes can take over the workload. Horizontal scaling also offers more flexibility to scale out gradually as needed. With vertical scaling, by contrast, handling more load means upgrading or replacing the machine itself, which often involves downtime.

However, vertical scaling may be useful when increased computing power is needed for specific tasks like CPU-intensive data processing. Overall, a scalable architecture employs a combination of vertical and horizontal scaling approaches to tune the system resource requirements over time.

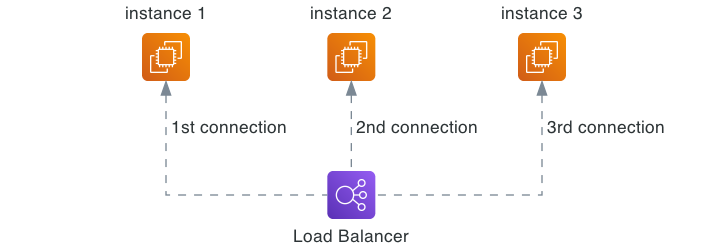

Load Balancing

Once you scale horizontally by adding servers, you need a way to distribute requests and traffic evenly across these nodes. This is where load balancing comes in. A load balancer sits in front of your servers and routes incoming requests to them efficiently.

This prevents any single server from becoming overwhelmed. The load balancer can implement different algorithms like round-robin, least connections, or IP-hash to determine how to distribute load. More advanced load balancers can detect server health and adaptively shift traffic away from failing nodes.
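To make the algorithms above more concrete, here is a minimal sketch of round-robin and least-connections selection that skips unhealthy nodes. The `Backend` and `Balancer` types, their fields, and the addresses are hypothetical names chosen for illustration. A real load balancer would also run health checks and update the in-flight counters itself.

```go
package main

import (
	"fmt"
	"sync"
)

// Backend is a hypothetical upstream server tracked by the balancer.
type Backend struct {
	Addr    string
	Healthy bool
	Active  int // in-flight requests, used by least-connections
}

// Balancer holds the backend pool; the mutex guards all fields.
type Balancer struct {
	mu       sync.Mutex
	backends []*Backend
	next     int // round-robin cursor
}

// NextRoundRobin returns healthy backends in rotation, or nil if none are healthy.
func (b *Balancer) NextRoundRobin() *Backend {
	b.mu.Lock()
	defer b.mu.Unlock()
	for i := 0; i < len(b.backends); i++ {
		candidate := b.backends[b.next]
		b.next = (b.next + 1) % len(b.backends)
		if candidate.Healthy {
			return candidate
		}
	}
	return nil
}

// LeastConnections returns the healthy backend with the fewest in-flight requests.
func (b *Balancer) LeastConnections() *Backend {
	b.mu.Lock()
	defer b.mu.Unlock()
	var best *Backend
	for _, candidate := range b.backends {
		if !candidate.Healthy {
			continue
		}
		if best == nil || candidate.Active < best.Active {
			best = candidate
		}
	}
	return best
}

func main() {
	lb := &Balancer{backends: []*Backend{
		{Addr: "10.0.0.1:8080", Healthy: true, Active: 3},
		{Addr: "10.0.0.2:8080", Healthy: false, Active: 0},
		{Addr: "10.0.0.3:8080", Healthy: true, Active: 1},
	}}
	for i := 0; i < 3; i++ {
		fmt.Println("round-robin:", lb.NextRoundRobin().Addr)
	}
	fmt.Println("least-connections:", lb.LeastConnections().Addr)
}
```

In this sketch the unhealthy node at 10.0.0.2 is never selected, so round-robin alternates between the two healthy nodes and least-connections picks 10.0.0.3.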

Load balancing maximizes resource utilization and increases performance. It also provides high availability and reliability. If a server goes down, the load balancer redirects traffic to the remaining online servers. This redundancy makes your system resilient to individual server failures.

Implementing load balancing alongside auto-scaling allows your system to scale out smoothly and painlessly. Your application can comfortably handle large traffic variations without running into capacity issues.

Database Scaling

As your application usage grows, the database backing your system can become a bottleneck. There are several techniques to scale databases to meet high read/write loads. However, databases are one of the hardest components to scale in most systems.

Database Selection:

The selection of an appropriate database plays a critical role in effectively scaling a database system. It depends on various factors, including the type of data to be stored and the expected query patterns. Different types of data, such as metrics data, logs, enterprise data, graph data, and key/value stores, have distinct characteristics and requirements that demand tailored database solutions.

For metrics data, where high write throughput is essential for recording time-series data, a time-series database like InfluxDB or Prometheus may be more suitable thanks to its optimized storage and querying mechanisms. On the other hand, for handling large volumes of unstructured data such as logs, a NoSQL database like Elasticsearch could provide efficient indexing and searching capabilities.

For enterprise data that requires strict ACID (Atomicity, Consistency, Isolation, Durability) transactions and complex relational querying, a traditional SQL database like PostgreSQL or MySQL might be the right choice. In contrast, for scenarios demanding simple read and write operations, a key/value store such as Redis, or a wide-column store such as Cassandra, could offer low-latency data access.

It's essential to thoroughly evaluate the specific requirements of the application and its data characteristics before making a database choice. Sometimes, a combination of databases (polyglot persistence) might be the most effective strategy, utilizing different databases for different parts of the application based on their strengths. Ultimately, the right database selection can significantly impact the scalability, performance, and overall success of the system.

Vertical Scaling:

Simply throwing more resources such as CPU, memory, and storage at a single database server can provide temporary relief under increased load. It is usually worth trying before reaching for more advanced database scaling techniques, and it keeps your database stack simple.

However, there is a physical ceiling to how large a single server can scale. Also, a monolithic database remains a single point of failure - if that beefed up server goes down, so does access to the data.

That's why alongside vertical scaling of the database server hardware, it's critical to employ horizontal scaling techniques.

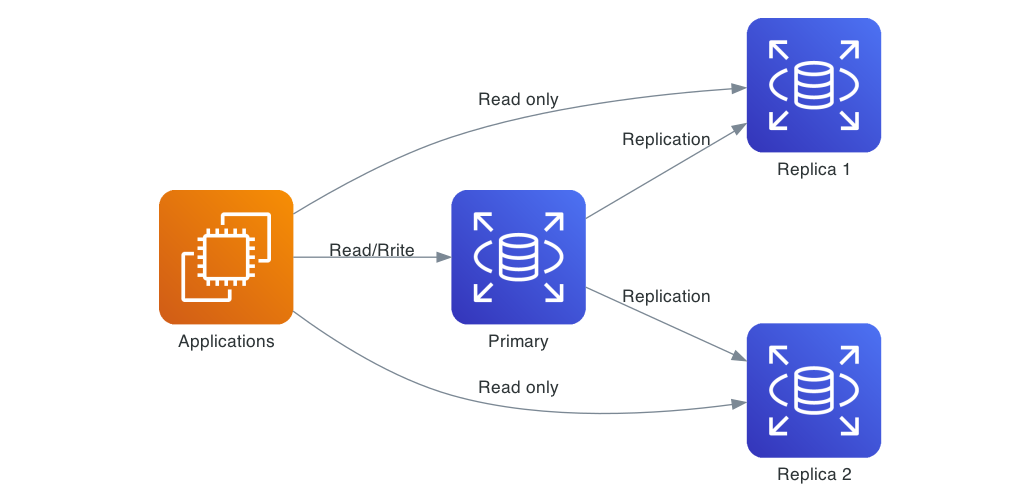

Replication:

Replication provides redundancy and improves performance by copying data across multiple database instances. A write to the leader node is replicated to the read replicas. Reads can then be served from the replicas, reducing load on the leader. Because the data lives on several servers, replication also reduces the risk of a single point of failure. With asynchronous replication, replicas can lag slightly behind the leader, so reads served from them may be briefly stale.

Sharding:

Sharding, or partitioning, splits your database into multiple smaller databases according to a criterion such as customer ID or geographic region. Each shard lives on its own server, so you can scale horizontally by adding more database servers as needed.
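As a rough illustration of routing by a shard key, the sketch below hashes a customer ID and maps it to one of a fixed number of shards. The function name `shardFor` and the shard names are assumptions made for this example, and `numShards` must be positive. Many real systems use consistent hashing or a lookup table instead, because plain modulo hashing remaps most keys whenever the number of shards changes.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// shardFor maps a shard key (here a customer ID) to one of numShards shards.
// The same key always lands on the same shard as long as numShards is unchanged.
func shardFor(customerID string, numShards int) int {
	h := fnv.New32a()
	h.Write([]byte(customerID)) // Write on a hash.Hash never returns an error
	return int(h.Sum32() % uint32(numShards))
}

func main() {
	// Hypothetical shard names; in practice these would be connection strings.
	shards := []string{"db-shard-0", "db-shard-1", "db-shard-2", "db-shard-3"}
	for _, id := range []string{"customer-17", "customer-42", "customer-99"} {
		fmt.Printf("%s -> %s\n", id, shards[shardFor(id, len(shards))])
	}
}
```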

In addition, a few other techniques can help scale a database:

- Schema Denormalization involves the duplication of data in a database to diminish the need for complex joins in queries, resulting in improved query performance.

- Caching frequently accessed data in a fast in-memory cache reduces database queries. A cache hit avoids having to fetch the data from the slower database.

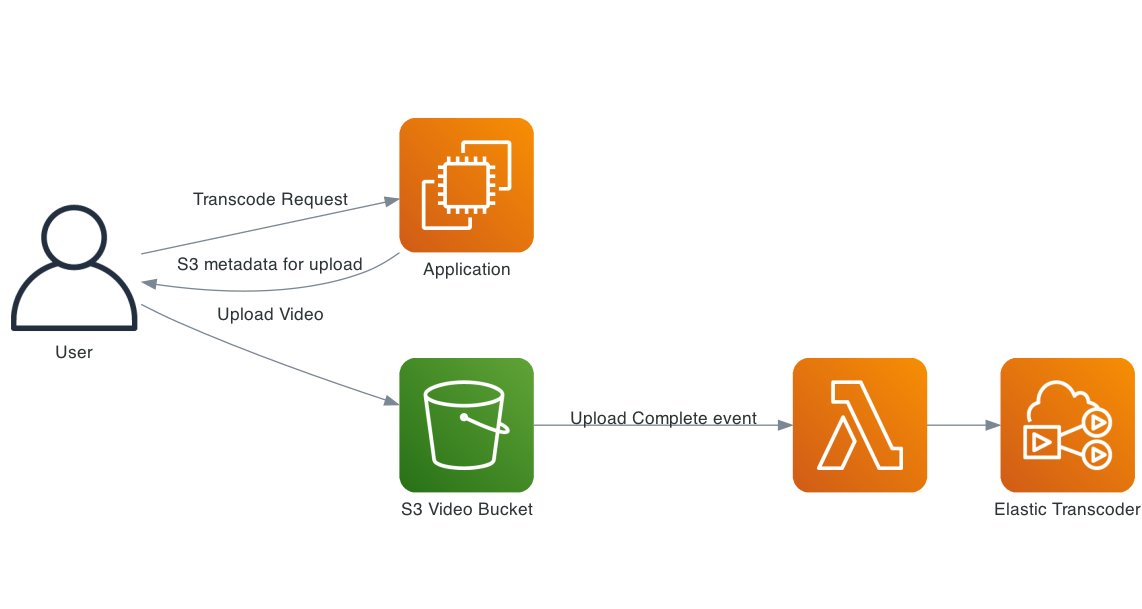

Asynchronous Processing

Synchronous request-response cycles can create bottlenecks that impede scalability, especially for long running or IO-intensive tasks. Asynchronous processing queues up work to be handled in the background, freeing up resources immediately for other requests.

For example, submitting a video transcoding job could directly block a web request, negatively impacting user experience. Instead, the transcoding task can be published to a queue and handled asynchronously. The user gets an immediate response, while the task processes separately.

Asynchronous tasks can be executed concurrently by background workers scaled horizontally across many servers. Queue sizes can be monitored to add more workers dynamically. Load is distributed evenly, preventing any single worker from becoming overwhelmed.
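The queue-and-workers pattern described above can be sketched in a single process with a buffered channel standing in for the queue. The `Job` type, `process`, and `runWorkers` are hypothetical names for this illustration. A production system would more likely use a durable message broker, since an in-memory channel loses its jobs if the process dies.

```go
package main

import (
	"fmt"
	"sync"
)

// Job is a hypothetical unit of background work, e.g. one video to transcode.
type Job struct {
	ID int
}

// process stands in for the slow task; here it only formats a result.
func process(j Job) string {
	return fmt.Sprintf("job %d done", j.ID)
}

// runWorkers starts n workers that drain the jobs channel. It returns all
// results once the channel is closed and every worker has finished.
func runWorkers(n int, jobs <-chan Job) []string {
	var (
		mu      sync.Mutex
		wg      sync.WaitGroup
		results []string
	)
	for w := 0; w < n; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := range jobs {
				r := process(j)
				mu.Lock()
				results = append(results, r)
				mu.Unlock()
			}
		}()
	}
	wg.Wait()
	return results
}

func main() {
	jobs := make(chan Job, 100) // buffered channel acts as the in-process queue
	for i := 1; i <= 10; i++ {
		jobs <- Job{ID: i} // the "request handler" enqueues and returns immediately
	}
	close(jobs)
	results := runWorkers(3, jobs)
	fmt.Println("processed", len(results), "jobs") // prints "processed 10 jobs"
}
```

Raising the worker count passed to `runWorkers` is the in-process analogue of adding more background workers when the queue grows.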

Shifting workloads from synchronous to asynchronous allows the application to handle spikes in traffic smoothly without getting bogged down. Systems remain responsive under load using robust queue-based asynchronous processing.

Stateless Systems

Stateless systems are easier to horizontally scale out compared to stateful designs. When application state is persisted in external storage like databases or distributed caches rather than locally on servers, new instances can be spun up as needed.

In contrast, stateful systems require sticky sessions or data replication across instances. A stateless application places no dependency on specific servers. Requests can be routed to any available resource.

Saving state externally also provides better fault tolerance. The loss of any stateless application server is not impactful since it holds no un-persisted critical data. Other servers can seamlessly take over processing.
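A small sketch can show why externalized state makes servers interchangeable. Here two server instances share one session store, so a session created through one instance is visible to the other. `SessionStore`, `memoryStore`, and `Server` are hypothetical names, and the in-memory map is only a stand-in for an external store such as a database or distributed cache.

```go
package main

import (
	"fmt"
	"sync"
)

// SessionStore is a hypothetical interface over external state storage.
type SessionStore interface {
	Get(sessionID string) (string, bool)
	Set(sessionID, user string)
}

// memoryStore is an in-memory stand-in so the sketch runs without dependencies.
type memoryStore struct {
	mu   sync.RWMutex
	data map[string]string
}

func newMemoryStore() *memoryStore {
	return &memoryStore{data: make(map[string]string)}
}

func (m *memoryStore) Get(id string) (string, bool) {
	m.mu.RLock()
	defer m.mu.RUnlock()
	u, ok := m.data[id]
	return u, ok
}

func (m *memoryStore) Set(id, user string) {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.data[id] = user
}

// Server keeps no per-user state of its own; everything lives in the store.
type Server struct {
	Name  string
	Store SessionStore
}

// Login records the session in the shared store.
func (s *Server) Login(sessionID, user string) {
	s.Store.Set(sessionID, user)
}

// WhoAmI resolves the session from the shared store, whichever server is asked.
func (s *Server) WhoAmI(sessionID string) string {
	user, ok := s.Store.Get(sessionID)
	if !ok {
		return s.Name + ": unknown session"
	}
	return s.Name + ": hello, " + user
}

func main() {
	store := newMemoryStore()
	a := &Server{Name: "server-a", Store: store}
	b := &Server{Name: "server-b", Store: store}

	a.Login("sess-1", "alice")      // request 1 lands on server-a
	fmt.Println(b.WhoAmI("sess-1")) // prints "server-b: hello, alice"
}
```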

A stateless architecture improves reliability and scalability. Resources can scale elastically while remaining decoupled from individual instances. However, external state storage adds overhead of cache or database queries. The tradeoffs require careful evaluation when designing web-scale applications.

Caching

Caching frequently accessed data in fast in-memory stores is a powerful technique to optimize scalability. By serving read requests from low latency caches, you can dramatically reduce load on backend databases and improve performance.

For example, product catalog information that rarely changes is ideal for caching. Subsequent product page requests can fetch data from Redis or Memcached rather than overloading your MySQL store. Cache invalidation strategies help keep data consistent.

Caching also benefits compute-heavy processes like template rendering. You can cache the rendered output and bypass redundant rendering for each request. CDNs like Cloudflare cache and serve static assets like images, CSS, and JS globally.

Redis Golang Example:

    package main

    import (
        "database/sql"
        "encoding/json"
        "fmt"
        "log"
        "net/http"
        "time"

        // This import path uses the older go-redis API without context arguments.
        // Newer major versions take a context.Context as the first argument.
        "github.com/go-redis/redis"
        _ "github.com/go-sql-driver/mysql"
    )

    const (
        dbUser     = "your_mysql_username"
        dbPassword = "your_mysql_password"
        dbName     = "your_mysql_dbname"
        redisAddr  = "localhost:6379"
    )

    // Product mirrors a row in the products table.
    type Product struct {
        ID    int    `json:"id"`
        Name  string `json:"name"`
        Price int    `json:"price"`
    }

    var db *sql.DB
    var redisClient *redis.Client

    func init() {
        // Initialize MySQL connection
        dbSource := fmt.Sprintf("%s:%s@/%s", dbUser, dbPassword, dbName)
        var err error
        db, err = sql.Open("mysql", dbSource)
        if err != nil {
            log.Fatalf("Error opening database: %s", err)
        }
        // sql.Open only validates its arguments; Ping verifies the connection
        if err = db.Ping(); err != nil {
            log.Fatalf("Error connecting to MySQL: %s", err)
        }

        // Initialize Redis client
        redisClient = redis.NewClient(&redis.Options{
            Addr:     redisAddr,
            Password: "", // No password set
            DB:       0,  // Use default DB
        })

        // Test the Redis connection
        _, err = redisClient.Ping().Result()
        if err != nil {
            log.Fatalf("Error connecting to Redis: %s", err)
        }

        log.Println("Connected to MySQL and Redis")
    }

    // getProductFromMySQL returns (nil, nil) when no row matches the ID.
    func getProductFromMySQL(id int) (*Product, error) {
        query := "SELECT id, name, price FROM products WHERE id = ?"
        row := db.QueryRow(query, id)

        var product Product
        err := row.Scan(&product.ID, &product.Name, &product.Price)
        if err == sql.ErrNoRows {
            // Not found is not an error; the handler turns it into a 404
            return nil, nil
        } else if err != nil {
            return nil, err
        }
        return &product, nil
    }

    // getProductFromCache returns (nil, nil) on a cache miss.
    func getProductFromCache(id int) (*Product, error) {
        productJSON, err := redisClient.Get(fmt.Sprintf("product:%d", id)).Result()
        if err == redis.Nil {
            // Cache miss
            return nil, nil
        } else if err != nil {
            return nil, err
        }

        var product Product
        err = json.Unmarshal([]byte(productJSON), &product)
        if err != nil {
            return nil, err
        }
        return &product, nil
    }

    // cacheProduct stores the product as JSON with a 10-minute expiry.
    func cacheProduct(product *Product) error {
        productJSON, err := json.Marshal(product)
        if err != nil {
            return err
        }
        key := fmt.Sprintf("product:%d", product.ID)
        return redisClient.Set(key, productJSON, 10*time.Minute).Err()
    }

    func getProductHandler(w http.ResponseWriter, r *http.Request) {
        productID := 1 // For simplicity, we assume product ID 1 here. You can pass it as a query parameter.

        // Try getting the product from the cache first
        product, err := getProductFromCache(productID)
        if err != nil {
            http.Error(w, "Failed to retrieve product from cache", http.StatusInternalServerError)
            return
        }

        if product == nil {
            // Cache miss, get the product from MySQL
            product, err = getProductFromMySQL(productID)
            if err != nil {
                http.Error(w, "Failed to retrieve product from database", http.StatusInternalServerError)
                return
            }
            if product == nil {
                http.Error(w, "Product not found", http.StatusNotFound)
                return
            }

            // Cache the product for future requests; a caching failure is logged, not fatal
            if err := cacheProduct(product); err != nil {
                log.Printf("Failed to cache product: %s", err)
            }
        }

        // Respond with the product details (from the cache or the database)
        w.Header().Set("Content-Type", "application/json")
        if err := json.NewEncoder(w).Encode(product); err != nil {
            log.Printf("Failed to write response: %s", err)
        }
    }

    func main() {
        http.HandleFunc("/product", getProductHandler)
        log.Fatal(http.ListenAndServe(":8080", nil))
    }

Strategically leveraging caching reduces strain on infrastructure and scales horizontally as you add more cache servers. Caching works best for read-heavy workloads with repetitive access patterns. It provides scalability gains alongside database sharding and asynchronous processing.

Network Bandwidth Optimization

For distributed architectures spread across multiple servers and regions, optimizing network bandwidth utilization is key to scalability. Network calls can become a bottleneck, imposing limits on throughput and latency.

Bandwidth optimization techniques like compression and caching reduce the number of network hops and amount of data transferred. Compressing API and database responses minimizes bandwidth needs.

Persistent connections via HTTP/2 allow multiple requests to be multiplexed over one open connection. This reduces round-trip overhead, improves resource utilization, and avoids HTTP-level head-of-line blocking. However, HTTP/2 still suffers from TCP head-of-line blocking: a single lost packet stalls every stream on the connection. HTTP/3 addresses this by running over QUIC, a UDP-based transport with TLS 1.3 built in, instead of TCP plus TLS, so a lost packet only delays the stream it belongs to.

CDN distribution brings data closer to users by caching assets at edge locations. By serving content from nearby, less data traverses costly long-haul routes.

Gzip Golang Example:

    package main

    import (
        "net/http"

        "github.com/labstack/echo/v4"
        "github.com/labstack/echo/v4/middleware"
    )

    func main() {
        e := echo.New()

        // Middleware
        e.Use(middleware.Logger())
        e.Use(middleware.Recover())
        e.Use(middleware.Gzip()) // Add gzip compression middleware

        // Routes
        e.GET("/", helloHandler)

        // Start server
        e.Logger.Fatal(e.Start(":8080"))
    }

    func helloHandler(c echo.Context) error {
        return c.String(http.StatusOK, "Hello, Echo!")
    }

Overall, scaling requires a holistic view encompassing not just compute and storage, but also network connectivity. Optimizing bandwidth usage by minimizing hops, compressing payloads, caching, and more is invaluable for building high-throughput and low-latency large-scale systems.

Progressive Enhancement

Progressive enhancement is a strategy that helps improve scalability for web applications. The idea is to build the core functionality first and then progressively enhance the experience for capable browsers and devices.

For example, you can develop the basic HTML/CSS site to ensure accessibility on any browser. Then you can add advanced CSS and JavaScript to incrementally improve interactions for modern browsers with JS support.

Serving basic HTML first provides a fast “time-to-interactive” and works on all platforms. Enhancements load afterwards to optimize the experience without blocking. This balanced approach extends reach while utilizing capabilities. For example, the Qwik framework bakes this concept into its foundation.

Progressively enhancing in phases also aids scalability. Simple pages require fewer resources and scale better. You can add more advanced features when needed rather than prematurely over-engineering for every possible use case upfront.

Overall, progressive enhancement allows web apps to scale efficiently right from basic to advanced functionality based on device capabilities and user needs.

Graceful Degradation

In contrast to progressive enhancement, graceful degradation involves starting from an advanced experience and scaling back features when constraints are detected. This allows applications to scale down fluidly when facing resource limitations.

For instance, a graphically-rich app may detect a low-powered mobile device and adapt to downgrade advanced visuals into a more basic presentation. Or a backend system may throttle non-essential operations during peak load to maintain core functionality.

Gracefully degrading preserves critical user workflows even under suboptimal conditions. Errors due to constraints like bandwidth, device capabilities or traffic spikes are minimized. The experience remains operational rather than failing catastrophically.

Feature degradation is a valuable tool that should be incorporated and planned for during the initial development of product features. The ability to deactivate features automatically or manually can prove essential in keeping the system functional under various circumstances, such as system overload, migrations, or unexpected performance issues.

When a system experiences high load or is overwhelmed by excessive traffic, dynamically deactivating non-critical features can alleviate strain and prevent complete service failures. This smart use of feature degradation ensures that the core functionalities remain operational and prevents cascading failures across the application.
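One way to picture automatic feature degradation is a small registry that switches non-critical features off once measured load crosses a threshold and switches them back on when load recovers. This is only a sketch: `Feature`, `Degrader`, the 0.8 threshold, and the feature names are all assumptions for illustration. A real system would also need manual overrides, since this version resets every non-critical feature on each observation.

```go
package main

import (
	"fmt"
	"sync"
)

// Feature describes a hypothetical toggleable feature.
type Feature struct {
	Name     string
	Critical bool // critical features are never disabled automatically
	Enabled  bool
}

// Degrader disables non-critical features once load crosses a threshold.
type Degrader struct {
	mu        sync.RWMutex
	features  map[string]*Feature
	threshold float64 // load (0.0-1.0) above which non-critical features are shed
}

// NewDegrader registers the given features under their names.
func NewDegrader(threshold float64, features ...Feature) *Degrader {
	d := &Degrader{features: make(map[string]*Feature), threshold: threshold}
	for i := range features {
		f := features[i] // copy, so each map entry points at its own value
		d.features[f.Name] = &f
	}
	return d
}

// Observe updates feature state from the current load measurement.
func (d *Degrader) Observe(load float64) {
	d.mu.Lock()
	defer d.mu.Unlock()
	overloaded := load > d.threshold
	for _, f := range d.features {
		if !f.Critical {
			f.Enabled = !overloaded
		}
	}
}

// IsEnabled reports whether a feature should run; unknown features are off.
func (d *Degrader) IsEnabled(name string) bool {
	d.mu.RLock()
	defer d.mu.RUnlock()
	f, ok := d.features[name]
	return ok && f.Enabled
}

func main() {
	d := NewDegrader(0.8,
		Feature{Name: "checkout", Critical: true, Enabled: true},
		Feature{Name: "recommendations", Critical: false, Enabled: true},
	)
	d.Observe(0.95) // simulated overload
	fmt.Println("checkout:", d.IsEnabled("checkout"))               // true
	fmt.Println("recommendations:", d.IsEnabled("recommendations")) // false
	d.Observe(0.40) // load recovers
	fmt.Println("recommendations:", d.IsEnabled("recommendations")) // true
}
```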

During database migrations or updates, feature degradation can help maintain system stability. By temporarily disabling certain features, the complexity of the migration process can be reduced, minimizing the risk of data inconsistencies or corruption. Once the migration is complete and verified, the features can be reactivated seamlessly.

Moreover, feature degradation can be a useful mechanism in situations where a critical bug or security vulnerability is discovered in a specific feature. Turning off the affected feature promptly can prevent any further damage while the issue is being addressed, ensuring the overall system's integrity.

Overall, incorporating feature degradation as part of the product's design and development strategy empowers the system to gracefully handle challenging situations, enhance resilience, and maintain an uninterrupted user experience during adverse conditions.

Building in graceful degradation mechanisms like device detection, performance monitoring and throttling improves an application's resilience when scaling up or down. Resources can be dynamically tuned to optimal levels based on real-time constraints and priorities.

Code Scalability

Scalability best practices focus heavily on infrastructure and architecture. But well-written and optimized code is key for scaling too. Suboptimal code hinders performance and resource utilization even on robust infrastructure.

Tight loops, inefficient algorithms and poorly structured data access can bog down servers. Architectures like microservices increase parallelism, but can multiply these inefficiencies.

Code profilers help identify hot spots and bottlenecks. Refactoring code to scale better optimizes CPU, memory and I/O resource usage. Distributing processing across threads also improves utilization of multi-core servers.

Example of Unscalable Code (Thread per request):

Inefficient code can hinder scalability even on robust infrastructure. For instance, allocating one OS thread per request does not scale well: each thread reserves its own stack and adds scheduling overhead, so the server runs out of memory or threads under high load.

Better approaches like asynchronous/event-driven programming and non-blocking I/O provide higher scalability. Node.js handles many concurrent requests efficiently on a single thread using this model.

Lightweight, runtime-managed threads are also more scalable than pools of OS threads. Examples are goroutines in Go, virtual threads in Java, and green threads in Python (for example via greenlet-based libraries).

Hundreds of thousands of goroutines can run concurrently vs limited OS threads. The runtime multiplexes goroutines onto real threads automatically. This removes thread lifecycle overhead and resource constraints of thread pools.
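To give a feel for how cheap goroutines are, the sketch below starts one goroutine per simulated request and waits for all of them. The function name `handleAll` and the request count are arbitrary choices for this example, and the atomic counter is only a stand-in for real request handling.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// handleAll runs one goroutine per simulated request and returns how many completed.
func handleAll(requests int) int64 {
	var (
		wg   sync.WaitGroup
		done int64
	)
	for i := 0; i < requests; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			atomic.AddInt64(&done, 1) // stand-in for real request handling
		}()
	}
	wg.Wait()
	return atomic.LoadInt64(&done)
}

func main() {
	fmt.Println("completed:", handleAll(100000)) // prints "completed: 100000"
}
```

Creating the same number of OS threads would typically exhaust memory or hit system limits, which is the contrast the paragraph above draws.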

Carefully structured code that maximizes asynchronous processing, uses virtual threads, and minimizes overhead is vital for large-scale applications, regardless of the infrastructure underneath.

Java Example of Virtual Thread Per Task:

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStreamReader;
    import java.io.PrintWriter;
    import java.net.ServerSocket;
    import java.net.Socket;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    public class VirtualThreadServer {

        public static void main(String[] args) {
            final int portNumber = 8080;

            // try-with-resources closes the server socket and the executor on exit
            try (ServerSocket serverSocket = new ServerSocket(portNumber);
                 ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
                System.out.println("Server started on port " + portNumber);

                while (true) {
                    // Wait for a client connection
                    Socket clientSocket = serverSocket.accept();
                    System.out.println("Client connected: " + clientSocket.getInetAddress());

                    // Submit the request handling task to the virtual thread executor
                    executor.submit(() -> handleRequest(clientSocket));
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        static void handleRequest(Socket clientSocket) {
            // The socket is listed first so it is closed last, after its streams
            try (
                clientSocket;
                BufferedReader in = new BufferedReader(new InputStreamReader(clientSocket.getInputStream()));
                PrintWriter out = new PrintWriter(clientSocket.getOutputStream(), true)
            ) {
                // Read the first line of the request from the client
                String request = in.readLine();

                // Process the request (you can add your custom logic here)
                String response = "HTTP/1.1 200 OK\r\nContent-Type: text/html\r\n\r\nHello, this is a virtual thread server!";

                // Send the response back to the client
                out.println(response);
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

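Note: to run the code above, make sure you have Java 20 installed, copy the code into VirtualThreadServer.java, and run it with java --source 20 --enable-preview VirtualThreadServer.java. Virtual threads were a preview feature in Java 20. From Java 21 onward they are final, so the preview flags are no longer needed.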

Just as infrastructure needs to scale, so does code. Efficient code ensures servers operate optimally under load. Overloaded servers cripple scalability, irrespective of the surrounding architecture. Optimize code alongside scaling infrastructure for best results.

Conclusion

Scaling a software system to handle growth is crucial for long-term success. We've explored key techniques like horizontal scaling, load balancing, database sharding, asynchronous processing, caching, and optimized code to design highly scalable architectures.

While scaling requires continual effort, investing early in scalability will prevent painful bottlenecks down the road. Consider your capacity needs well in advance rather than as an afterthought. Build redundancies, monitor usage, expand incrementally, and distribute load across many nodes.

With a robust and adaptive design, your software can continue delighting customers even as usage explodes 10x or 100x. Planning for scale will distinguish your application from the multitudes that crash under growth. Your users will stick around when your platform remains just as fast, available and reliable despite increasing demand.
