SLOs Are Overrated

The SRE industry is obsessed with SLOs. We've built an entire subindustry around them, filled conferences, engineered sophisticated systems, and staffed dedicated teams to maintain them.

SLOs are an integral part of Google's Site Reliability Engineering book, which many consider the SRE Bible. But being part of Google's methodology doesn't make them the best fit for every team or organization.

The ultimate goal of business and software engineering is delivering value to customers and keeping customers happy, not meeting abstract targets. So let's take a step back and discuss areas where I feel our relationship with SLOs can be improved.

Maintenance Overhead

Defining, measuring, monitoring and maintaining SLOs comes at a cost. Robust SLO implementations require dedicated infrastructure, tooling and processes:

- Instrumentation to properly measure and collect SLIs.

- Custom dashboards to display SLO metrics and status.

- Specialized monitoring software (e.g. to calculate burn rate).

- Alarm and notification systems to trigger alerts for SLO violations.

- On-call training for responding to threshold breaches.

- Regular analysis to identify trends.

- Periodic reevaluation of SLO appropriateness and levels.
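Burn rate, mentioned in the list above, is commonly defined as the observed error rate divided by the error rate the SLO allows (1 minus the target). A burn rate of 1 spends the error budget exactly over the SLO window; a burn rate of 10 spends it ten times faster. The Python sketch below assumes a request-based SLI with bad/total event counts; the function name and the numbers are illustrative, not taken from any particular monitoring product.

```python
def burn_rate(bad_events: int, total_events: int, slo_target: float) -> float:
    """Return how fast the error budget is being consumed.

    1.0 means the budget runs out exactly at the end of the SLO window;
    values above 1.0 mean it runs out early.
    """
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be between 0 and 1, exclusive")
    if total_events == 0:
        return 0.0  # no traffic, so no budget consumed
    observed_error_rate = bad_events / total_events
    allowed_error_rate = 1.0 - slo_target
    return observed_error_rate / allowed_error_rate


# Hypothetical example: 50 failed requests out of 10,000 in the last hour
# against a 99.9% target, i.e. a 0.5% error rate vs. 0.1% allowed.
print(round(burn_rate(50, 10_000, 0.999), 2))  # 5.0
```

Many teams only page when a high burn rate persists over both a long and a short window, which is one way to keep the alerting load described above manageable.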

If you don't have unlimited resources, consider simplifying the overall approach. Focus on minimum viable SLOs tied to truly critical user journeys. Leverage existing metrics and monitoring where possible (e.g., the four golden signals: latency, traffic, errors, and saturation). Document SLOs in written specs rather than fancy dashboards.
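As one illustration of a lightweight, spec-first approach, the sketch below declares a single SLO for a critical user journey as plain data and computes its SLI from good/total request counts that an existing error-rate metric would likely already provide. The `SloSpec` structure, the journey, the field names and the target are all hypothetical; adapt them to whatever your metrics system actually exposes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SloSpec:
    """A minimal, reviewable SLO definition that can live in version control."""
    journey: str      # the critical user journey this SLO protects
    sli: str          # plain-language definition of a "good" event
    target: float     # e.g. 0.995 means 99.5% of events must be good
    window_days: int  # rolling evaluation window

    def sli_value(self, good_events: int, total_events: int) -> float:
        """Fraction of good events; no traffic counts as fully compliant."""
        if total_events == 0:
            return 1.0
        return good_events / total_events

    def is_met(self, good_events: int, total_events: int) -> bool:
        """True if the measured SLI meets or exceeds the target."""
        return self.sli_value(good_events, total_events) >= self.target


# Hypothetical spec for a job-search product's "apply to job" flow.
apply_slo = SloSpec(
    journey="apply to job",
    sli="POST /apply returns a non-5xx response within 2 seconds",
    target=0.995,
    window_days=28,
)

print(apply_slo.is_met(good_events=99_620, total_events=100_000))  # True
```

Keeping the definition this small makes it easy to review in a pull request and to revisit during the periodic reevaluation mentioned above.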

The goal is improving real user experiences, not creating metrics for their own sake. Prioritize simplicity over comprehensive SLO implementations. Weigh the costs against the benefits. Keep SLOs lightweight, pragmatic and focused on outcomes that matter.

Defining Appropriate SLOs is Challenging

Metrics alone can miss the full picture of how systems affect people's lives. In job search, for example, when the system is unavailable or degraded, thousands of job seekers can't search for or apply to jobs. That can have real consequences: missed opportunities, financial hardship, and elevated stress. An SLO focused narrowly on uptime or load times would ignore these human costs.

For SLOs to be meaningful, they need to be set at reasonable and achievable levels. Set them too high and teams are overwhelmed by constant violations; set them too low and they are useless, because they never flag real problems. Coming up with the "right" objectives requires deep knowledge of systems and user expectations.

Increased Pressure on Teams

The intense pressure to continually review dashboards and meet numeric targets also imposes strain on engineers and on-call responders. This constant stress increases burnout risk. Similarly, the overhead of frequent tuning cycles steals focus from product work.

Additionally, SLOs are designed to focus engineering effort on measurable outcomes. However, this can incentivize teams to "game" metrics, optimizing for what's quantifiable at the expense of other business goals. For example, a customer support SLO focused on response time could lead to rushed, low-quality responses.

In the quest to hit SLOs, we can't lose sight of more human considerations like sustainable operational practices and work-life balance. At times it can feel like being a hamster on a wheel, running relentlessly towards targets without true progress.

Complacency Risk

Hitting all SLO targets could provide a false sense of security and achievement. Without continuing to raise the bar, teams could stagnate. SLOs focus on minimum acceptable levels, not continuous improvement.

For example, an e-commerce site could have an SLO of 99% uptime and meet it consistently every month. But that allows roughly 7.2 hours of downtime in a 30-day month, which could be devastating during peak sales seasons. Simply maintaining 99% uptime stops short of exploring how to minimize outages further. There is no incentive to go beyond the status quo.
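The downtime figure follows directly from the target: allowed downtime is (1 - target) multiplied by the length of the window. The helper below is a sketch that assumes a 30-day month and purely time-based availability; request-based SLOs would count failed requests instead of minutes. The function name is hypothetical.

```python
def allowed_downtime_minutes(target: float, window_days: float = 30) -> float:
    """Minutes of full downtime permitted by a time-based availability target."""
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    window_minutes = window_days * 24 * 60
    return (1.0 - target) * window_minutes


for target in (0.99, 0.999, 0.9995):
    minutes = allowed_downtime_minutes(target)
    print(f"{target:.2%} -> {minutes:.1f} min ({minutes / 60:.2f} h) per 30 days")
```

For the 99% target this works out to 432 minutes, about 7.2 hours. For comparison, the 99.95% login-service target discussed later leaves only about 21.6 minutes.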

Gaming the System

SLOs tied to performance reviews or strict mandates could tempt teams to manipulate results or take shortcuts. Examples include only monitoring during off-peak hours, not reporting certain failures, or focusing solely on the measured service at the expense of other system health factors.

The Overuse of SLOs

A common symptom of SLO overuse is when teams start requesting SLOs for minor system components. For example, we had engineering teams asking, "Can we get an SLO for the availability of a single node in the Kubernetes cluster?"

But do users directly interact with Node X? Will they notice if it has additional latency during maintenance? Likely not. SLOs should focus on end user journeys, not internal components.
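To make the distinction concrete, the sketch below contrasts an internal per-node success rate with a user-journey availability SLI computed from requests as seen at the edge (for example, a load balancer). It assumes the edge retries a failed attempt on another node and logs every attempt; the `EdgeRequest` record format and the sample data are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class EdgeRequest:
    """One user request as seen at the edge (hypothetical log format)."""
    attempts: list[tuple[str, int]]  # (node, HTTP status) for each try, in order

    @property
    def final_status(self) -> int:
        # The user receives the status of the last attempt.
        return self.attempts[-1][1]


def journey_availability(requests: list[EdgeRequest]) -> float:
    """Share of requests where the user got a non-5xx response."""
    if not requests:
        return 1.0
    good = sum(1 for r in requests if r.final_status < 500)
    return good / len(requests)


def node_success_rate(requests: list[EdgeRequest], node: str) -> float:
    """Share of attempts on one node that succeeded (an internal signal)."""
    statuses = [s for r in requests for n, s in r.attempts if n == node]
    if not statuses:
        return 1.0
    return sum(1 for s in statuses if s < 500) / len(statuses)


# Made-up sample: node-b fails every attempt, but retries land on healthy nodes.
sample = [
    EdgeRequest([("node-a", 200)]),
    EdgeRequest([("node-b", 503), ("node-c", 200)]),
    EdgeRequest([("node-b", 503), ("node-a", 200)]),
    EdgeRequest([("node-c", 200)]),
]

print(node_success_rate(sample, "node-b"))  # 0.0
print(journey_availability(sample))         # 1.0
```

In this setup, an SLO on node-b would page someone even though no user saw an error, while the journey SLI stays at 100%.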

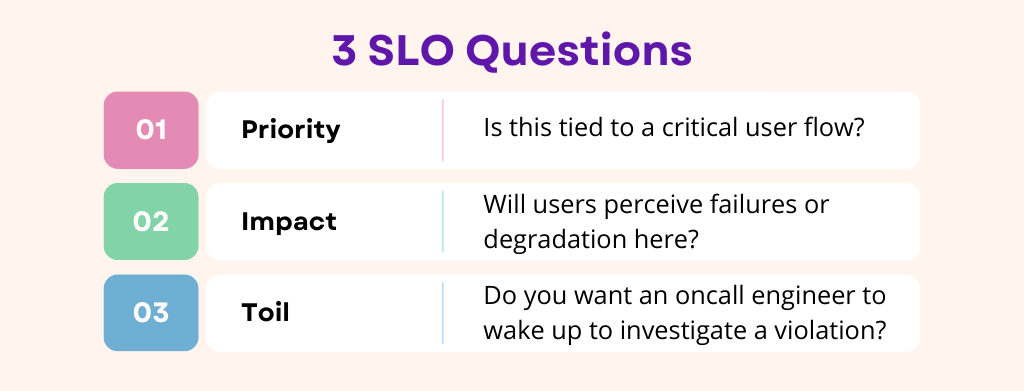

Before defining a new SLO, ask yourself:

- Is this tied to a critical user flow?

- Will users perceive failures or degradation here?

- Do you want an on-call engineer to wake up to investigate a violation?

SLOs are not needed for every system or service. Stay disciplined about applying them only where they offer real user value. Focus SLOs where they can make the biggest difference to customers.

SLO No-Op

Another common anti-pattern is SLOs that are monitored but rarely acted upon. Engineers review dashboards and get alerts when thresholds are crossed, but take little concrete action beyond writing post-mortems.

For example, a team may have an SLO requiring 99.95% uptime for a login service. They get paged when the service goes down and document the incident, but don't investigate the root cause or take preventive action. The same outage then happens again the following month due to the same undiscovered bug.

This "no-op" approach to SLOs generates visibility without accountability. We go through the motions of measuring reliability without doing anything meaningful to improve it.

Closing Thoughts

In summary, SLOs can provide useful signals when implemented carefully, but they also risk becoming burdensome, misleading metrics theater. Stay pragmatic: SLOs are a tool for meeting business goals, not goals in themselves.

A note for organizational leaders: keep it human-centric. SLOs should enlighten and empower engineers, not pressure and control them. Keep asking whether your SLO investment pays dividends in better user experiences.
